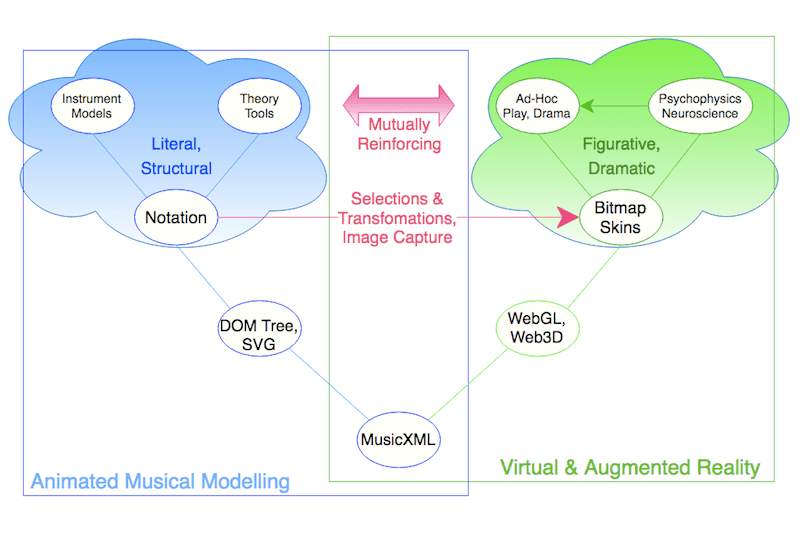

At first glance, music notation has been thoroughly commoditized. Whether in the browser DOM (SVG) or in WebGL (Web3D), the future seems to lie higher up the value chain: in music visualization. The motivation is simple: musicians 'hear' the written note, while learners seek visual models.

The move towards this graphical 'added value', however, appears to be stalling. In this context, the two questions explored here are:

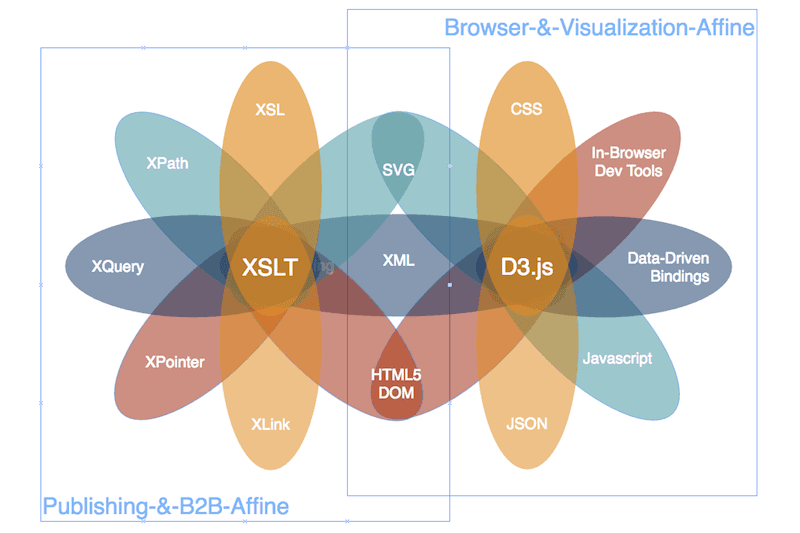

The XSLT constellation has until now been viewed as the 'go-to' technology for MusicXML processing. It is said to be clean, well-proven, reasonably quick and elegant. (Disclaimer: I've never used it, so consider me a 'pop-up expert'.)

The sluggish progress in both of the above areas, however, suggests a problem in the current technology stack: one or more technical disconnects somewhere between the most widely used exchange format (MusicXML), the technologies used to build SVG or Web3D models, and those used to manipulate them in the browser.

Could this obstruction lie, at least in part, in the very technology most widely used to generate notation in the browser?

XSLT

XSLT forms the basis of a set of software libraries widely used to transform MusicXML into SVG, a language for describing sophisticated graphics for use with the browser DOM (Document Object Model).

In this post, we try to identify any disconnects, figure out where the benefits of the XSLT technology constellation end and possible impediments begin, and, as exemplified by the banner image above, explore a powerful, data-driven alternative.

In data visualization, the terms 'transformation' and 'transition' are often (and wrongly) used interchangeably.

Transformation refers to a conversion of data container format (such as from MusicXML to SVG, our focus here), or to a change in the end properties (position, size, shape, color, viewpoint or scale) of a graphical screen object after a deterministic function has been applied to each point in its underlying data set.

Transition, on the other hand, describes the action itself (the deterministic function) used to achieve a transformation. Several transitions may be employed (chained) to achieve a single transformation.
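To make the distinction concrete, here is a minimal TypeScript sketch. The names `Point`, `Transition`, `chain` and `transform` are illustrative only, not taken from any library: each transition is a deterministic function applied to every point, and chaining transitions yields the overall transformation (the end state).

```typescript
// A point in a graphical object's underlying data set.
type Point = { x: number; y: number };

// A transition: one deterministic function applied to every point.
type Transition = (p: Point) => Point;

// Two example transitions: scale about the origin, and translate.
const scale = (k: number): Transition => (p) => ({ x: p.x * k, y: p.y * k });
const translate = (dx: number, dy: number): Transition => (p) => ({ x: p.x + dx, y: p.y + dy });

// Chain several transitions into one, applied left to right.
const chain = (...steps: Transition[]): Transition => (p) =>
  steps.reduce((acc, step) => step(acc), p);

// The transformation: the end state once the chained function has been applied to every point.
function transform(points: Point[], t: Transition): Point[] {
  return points.map(t);
}

// Usage: scale a (very simplified) note head by 2, then move it.
const noteHead: Point[] = [{ x: 0, y: 0 }, { x: 2, y: 1 }];
const moved = transform(noteHead, chain(scale(2), translate(10, 5)));
// moved: [{ x: 10, y: 5 }, { x: 14, y: 7 }]
```

Swapping the order of the two transitions gives a different end state, which is exactly why the chain, not any single step, defines the transformation.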

MusicXML's Reach

We looked (in a separate post) at the shared role of MusicXML in the 'literal' DOM/SVG and 'figurative' WebGL/Web3D worlds, and at how imagery captured in the former could be repurposed in the latter.

Because it offers such a huge spectrum of opportunity for online social value delivery, this constellation should be the first focus of anyone working towards solutions in music teaching or learning.

MusicXML And The Browser DOM

With MusicXML as the standard exchange format at the root of current score-building capabilities, in a browser DOM context we currently have a choice between at least two clearly differentiated open-source technology stacks.

On the one hand, there is the dominant, specification-led, rule-based XSLT software constellation; on the other, a test-driven, fly-by-the-seat-of-your-pants JavaScript approach.

At our disposal on the JavaScript side, however, are some powerful data visualization libraries, including their flagship, D3.js.

MusicXML And WebGL

Other than for bitmap-based notation, MusicXML appears to have almost no foothold in WebGL/Web3D. This can perhaps be ascribed to the following:

MusicXML And XSLT

The XSLT specification defines XSLT as "a language for transforming XML documents into other XML documents", of which both MusicXML and SVG are widely used examples.

The XSLT Programmer's Reference (2nd Edition) suggests XSLT can be used to:

In short: from more or less anything to XML, and from XML to more or less anything.

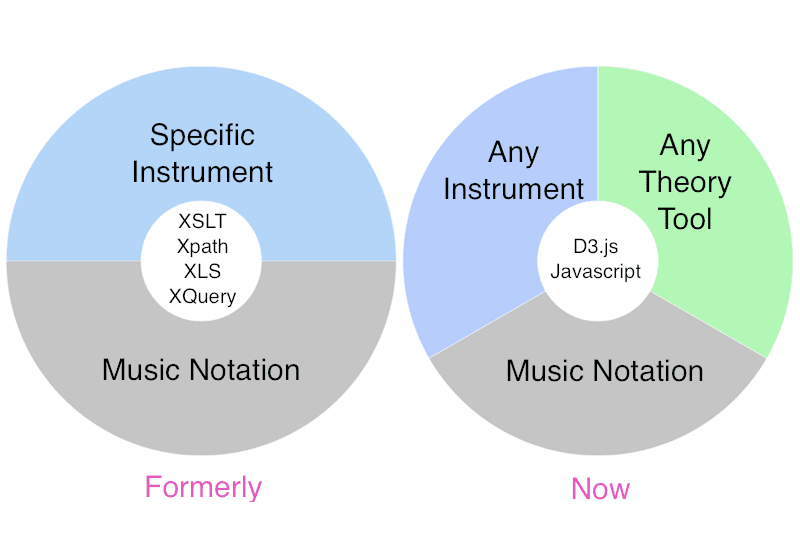

With MusicXML now maintained under the W3C umbrella (by its Music Notation Community Group) as the de facto standard for music exchange, XSLT and its suite of tools (XSL, XPath, XQuery etc.) have attained the status of a transformational music technology. With XSLT's help, the marriage between MusicXML and SVG notation has, you might say, been made.

There are other approaches, but these tend to focus (using, for example, Domain Specific Languages, or DSLs) on expressing the underlying structures of music more succinctly, in the end offering a level of visual interactivity only marginally different from what is already available using XSLT.

MusicXML And D3.js

According to Interactive Data Visualization for the Web, D3 is intended primarily for explanatory as opposed to exploratory visualizations, the latter of which help you discover significant, meaningful patterns in data.

This is an important distinction, and one that our layered, highly flexible configuration processes for instrument and theory tool modeling are (with the help of D3.js) intended to overcome.

D3's main uses are:

Server:

Implemented with care in an aggregator platform, to the above list we can add:

In a D3.js context, parsing is a layered process ultimately concerned with creating direct graphical bindings. Parsing MusicXML, however, is a fairly complex process aimed at transforming serial data into timeline-based, parallel parts and voices.
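The serial-to-parallel step can be sketched as a time cursor walking a measure. In MusicXML, each `<note>` advances the cursor by its `<duration>` (in `<divisions>` per quarter note), `<backup>` and `<forward>` move the cursor explicitly, and a note carrying `<chord/>` starts together with the preceding note. The sketch below assumes the XML has already been read into a flat, hypothetical `MeasureEvent` list in document order; the XML-reading step itself is omitted.

```typescript
// Hypothetical, already-extracted MusicXML measure content, in document order.
type MeasureEvent =
  | { kind: "note"; pitch: string; duration: number; voice: number; chord?: boolean }
  | { kind: "backup"; duration: number }
  | { kind: "forward"; duration: number };

type TimedNote = { pitch: string; voice: number; onset: number; duration: number };

// Walk the serial event list with a time cursor (in divisions) and
// return notes grouped by voice, each stamped with its onset.
function toVoices(events: MeasureEvent[], measureStart = 0): Map<number, TimedNote[]> {
  const voices = new Map<number, TimedNote[]>();
  let cursor = measureStart;
  let lastOnset = measureStart;
  for (const ev of events) {
    if (ev.kind === "backup") {
      cursor -= ev.duration; // move back, typically to start the next voice
    } else if (ev.kind === "forward") {
      cursor += ev.duration; // skip time without sounding a note
    } else {
      // A <chord/> note starts with the previous note rather than at the cursor.
      const onset = ev.chord ? lastOnset : cursor;
      const list = voices.get(ev.voice) ?? [];
      list.push({ pitch: ev.pitch, voice: ev.voice, onset, duration: ev.duration });
      voices.set(ev.voice, list);
      if (!ev.chord) {
        lastOnset = cursor;
        cursor += ev.duration; // chord notes do not advance the cursor
      }
    }
  }
  return voices;
}

// Usage: divisions = 2 per quarter; voice 1 plays half, quarter, quarter;
// voice 2 holds a two-note chord for the whole measure.
const measure: MeasureEvent[] = [
  { kind: "note", pitch: "C5", duration: 4, voice: 1 },
  { kind: "note", pitch: "E5", duration: 2, voice: 1 },
  { kind: "note", pitch: "G5", duration: 2, voice: 1 },
  { kind: "backup", duration: 8 },
  { kind: "note", pitch: "C4", duration: 8, voice: 2 },
  { kind: "note", pitch: "E4", duration: 8, voice: 2, chord: true },
];
const voices = toVoices(measure);
// voice 1 onsets: 0, 4, 6; voice 2 onsets: 0, 0
```

A full parser would also carry the cursor across measures (via `measureStart`) and handle grace notes, cue notes and ties, which this sketch ignores.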

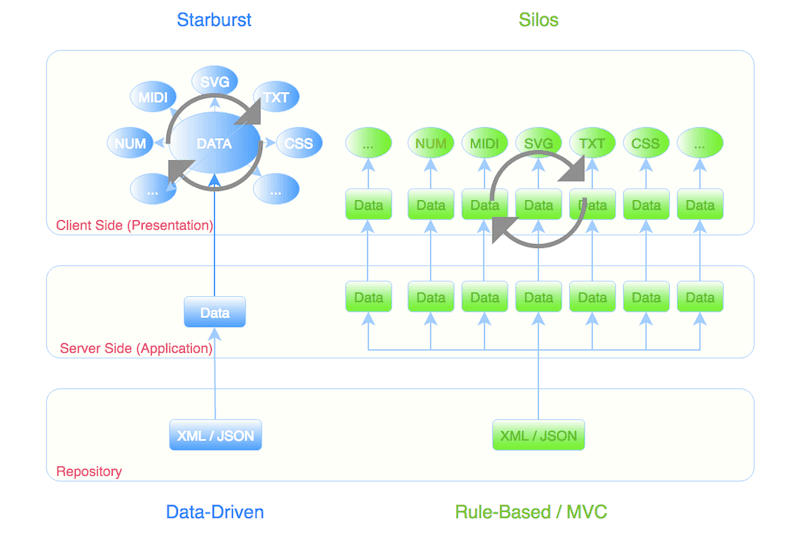

Moreover, as hinted at above, we want both parsing and any resulting SVG notation generation done on the server, leaving only its manipulation (transformation during, for example, playback) to the browser.

XSLT's Technical Disconnect

It is telling that XSLT barely features in reviews of, or searches for, data visualization tools. This impression is only reinforced by the fact that XSLT's role in music seems to have been capped at the level of notation.

XSLT's potential should, logically, at least extend to the generation and driving of complex, score-driven, exchangeable, time-synchronized and highly interactive on-screen visual SVG animations.

XSLT has certainly facilitated the creation of beautifully typeset notation in a wide variety of fonts, but possibly at the cost of interactivity and flexibility.

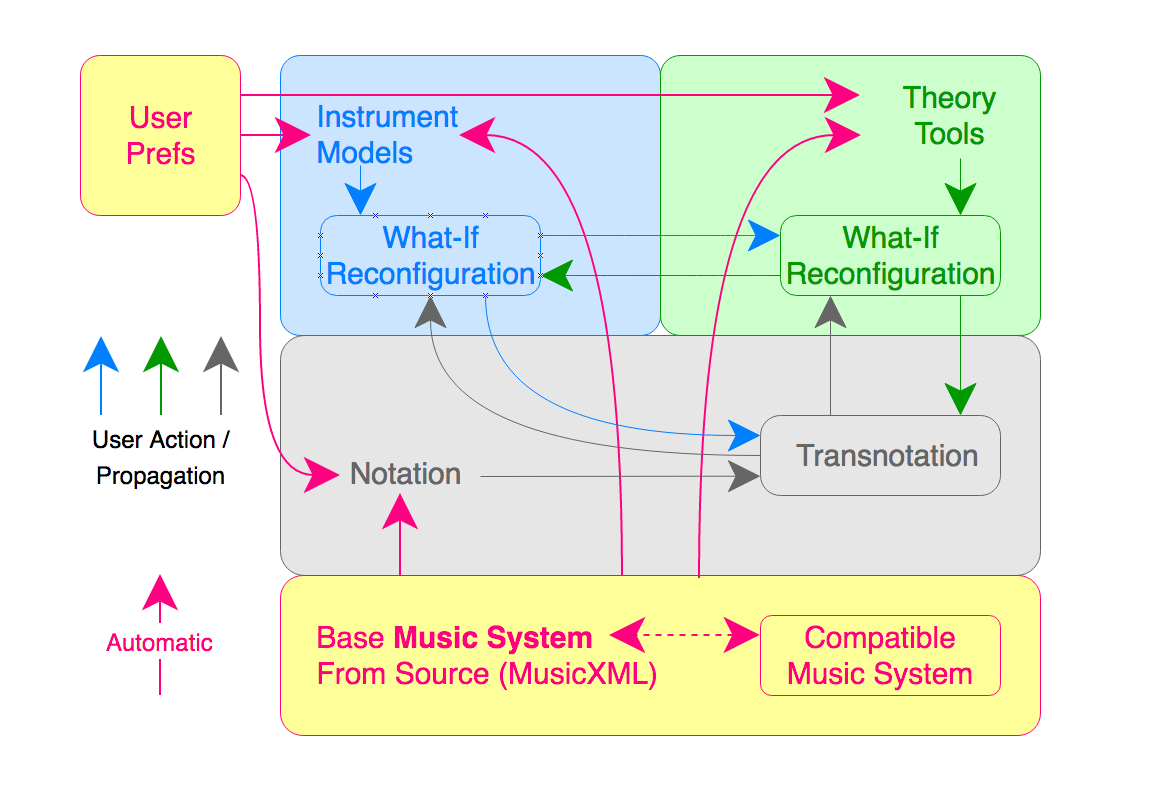

Though the DOM/SVG opportunities have by no means been exhausted, the problem is only exacerbated as we move towards a WebGL/Web3D context. The following diagram may help illustrate this:

While any instrument/theory tool disconnect might be attributed to technical complexity, MusicXML's use in the Web3D provisioning chain is still in its infancy.

I have seen it claimed that there is currently no great interest in WebGL music applications. Given the size of the music learning industry, I find this difficult to believe.

What seems more likely is that no one has yet been able to formulate a technical concept bridging the gap between MusicXML and WebGL.

XSLT Limitations

So what are the limitations of XSLT? Mike Bostock, one of the creators of D3.js, sums these up thus:

"Extensible Stylesheet Language Transformations (XSLT) is another declarative approach to document transformation. Source data is encoded as XML, then transformed into HTML using an XSLT stylesheet consisting of template rules. Each rule pattern-matches the source data, directing the corresponding structure of the output document through recursive application. XSLT’s approach is elegant, but only for simple transformations: without high-level visual abstractions, nor the flexibility of imperative programming, XSLT is cumbersome for any math-heavy visualization task (e.g., interpolation, geographic projection or statistical methods)."

"Cumbersome". Mmm. These remarks (admittedly made before the release of XSLT 3.0) have profound implications for music visualization, the missing "high-level visual abstractions" referring to direct and locally manipulable bindings between data and (say) SVG. Interpolation is presumably singled out because it forms the basis of smooth (jitter-free) animations.
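Since interpolation is at the heart of that complaint, here is a small sketch of what it involves for animation: linear interpolation between two positions, shaped by an easing curve and sampled once per frame. It is plain TypeScript without D3; `lerp`, `easeCubicInOut` and `tweenFrames` are illustrative names, although the easing formula is the same cubic in-out curve D3 offers as `d3.easeCubicInOut`.

```typescript
// Linear interpolation between a and b for t in [0, 1].
const lerp = (a: number, b: number, t: number): number => a + (b - a) * t;

// Cubic ease-in-out: slow start, fast middle, slow end
// (the same curve as d3.easeCubicInOut, written out by hand here).
function easeCubicInOut(t: number): number {
  return t < 0.5 ? 4 * t * t * t : 1 - Math.pow(-2 * t + 2, 3) / 2;
}

// Sample count + 1 eased positions between `from` and `to`, one per animation frame.
function tweenFrames(from: number, to: number, count: number, ease = easeCubicInOut): number[] {
  const out: number[] = [];
  for (let i = 0; i <= count; i++) {
    out.push(lerp(from, to, ease(i / count)));
  }
  return out;
}

// Usage: a note head sliding from x = 0 to x = 100 in four steps.
const xs = tweenFrames(0, 100, 4); // [0, 6.25, 50, 93.75, 100]
```

In a real animation the frame parameter would come from elapsed time rather than a fixed step count, but the interpolate-then-ease structure stays the same.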

XSLT has, of course, moved on since then. All leading browsers, for example, have supported client-side XSLT transformation (though only XSLT 1.0) since at least 2010. The question is how close such improvements come to the direct data bindings of D3.js.

XSLT And Synchronization

Transforming MusicXML into SVG notation according to embedded time tags is an area where XSLT implementations still seem to have the upper hand.

The XSLT Programmer's Reference suggests that one of the benefits of XSLT is "separating (decoupling) data from presentation", in practice achieved by each different stylesheet rendering data in a different way, and resulting in 'one data source, multiple resulting formats, and conversion between the formats'.

The moment data and presentation are decoupled in a music visualization context, however, we invite a number of issues: synchronization drift between audio and visual elements, less directly accessible data, data fragmentation (and hence a greater likelihood of duplication).

The best guarantee of audio-visual synchronization, on the other hand, is to keep sound and image generation intimately bound to the unique underlying data, and directly associated with hardware timers. Using D3.js, this is pretty straightforward.
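A minimal sketch of that binding, assuming constant tempo: both the audio schedule and the visual highlight cues are derived from one note list through one time conversion, anchored to one clock origin. In a browser, `clockStart` would plausibly be read from `AudioContext.currentTime` (the audio hardware clock), audio events handed to a source node's `start(when)` and `stop(when)`, and an animation loop would compare `currentTime` with the visual cues; that wiring is omitted, and `SourceNote`, `toSeconds` and `schedule` are hypothetical names.

```typescript
// Notes as parsed from MusicXML, timed in divisions (hypothetical shape).
type SourceNote = { id: string; onsetDiv: number; durationDiv: number; midi: number };

// The single time conversion that both audio and graphics go through (constant tempo assumed).
const toSeconds = (div: number, divisionsPerQuarter: number, bpm: number): number =>
  (div / divisionsPerQuarter) * (60 / bpm);

// Derive audio events and visual cues from the same notes and the same clock origin,
// so the two timelines are never computed separately.
function schedule(notes: SourceNote[], divisionsPerQuarter: number, bpm: number, clockStart: number) {
  const audio = notes.map((n) => ({
    midi: n.midi,
    start: clockStart + toSeconds(n.onsetDiv, divisionsPerQuarter, bpm),
    stop: clockStart + toSeconds(n.onsetDiv + n.durationDiv, divisionsPerQuarter, bpm),
  }));
  // Visual cues reuse the audio times rather than recomputing them.
  const visual = notes.map((n, i) => ({ id: n.id, on: audio[i].start, off: audio[i].stop }));
  return { audio, visual };
}

// Usage: two quarter notes at 120 bpm (2 divisions per quarter), starting at clock time 10 s.
const plan = schedule(
  [
    { id: "n1", onsetDiv: 0, durationDiv: 2, midi: 60 },
    { id: "n2", onsetDiv: 2, durationDiv: 2, midi: 64 },
  ],
  2, 120, 10,
);
```

The design choice is that a tempo change or a data edit flows through `toSeconds` once, updating sound and image together.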

XSLT And Flexible Configuration

With the XSLT approach, however, each product format, whether data or document, is effectively a silo. This may be a valid requirement in an MVC environment, where the application controls presentation (one set of data being tailored, for example, to mobile vs desktop use, a conventional hierarchical web site, the peculiarities of individual browsers, or simply different user roles). It is inappropriate, however, in a data-driven scenario, where data and its (sometimes multiple) uses are closely bound, and where the resulting presentation type, whether audio, music notation (essentially a scatterplot), tree, choropleth, map or bar graph, is under direct user control.

This disconnect would also conflict with our layered approach to instrument and theory tool configuration, where a change at a lower level (the scale length of a stringed instrument, for example) impacts everything (tunings, fret positions, fingerboard roadmap etc) above it.
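The scale-length example can be sketched numerically. Assuming frets placed purely by equal division of the octave into N steps (no intonation compensation), fret n sits at a distance L(1 - 2^(-n/N)) from the nut, where L is the scale length. The hypothetical `fretPositions` below derives every fret from that one lower-level value, so changing it re-derives everything built on top.

```typescript
// Distance of each fret from the nut, for an equal division of the octave.
// Assumption: pure equal temperament, no compensation for string stiffness or action.
function fretPositions(scaleLength: number, notesPerOctave: number, fretCount: number): number[] {
  const out: number[] = [];
  for (let n = 1; n <= fretCount; n++) {
    // Fret n leaves a vibrating length of scaleLength * 2^(-n / notesPerOctave).
    out.push(scaleLength * (1 - Math.pow(2, -n / notesPerOctave)));
  }
  return out;
}

// Usage: the octave fret always falls at half the scale length.
const standard = fretPositions(650, 12, 12);   // 12-tone equal temperament
const quarterTone = fretPositions(650, 24, 24); // 24 equal steps per octave
const shorter = fretPositions(630, 12, 12);     // a lower-level change: every fret moves
```

Anything layered above (fingerboard roadmap, fingering overlays) would consume this array rather than hold its own copy, which is the point of the layered approach.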

The XSLT stack, then, remains suited to rigorously targeted B2B (business-to-business) communications, allowing conversion between a variety of document formats, but less so to reusable and extendable musical animations and the ad-hoc control actions of users in a shared, P2P (peer-to-peer) music environment.

Both XSLT and D3.js rely on an XML parser to convert the XML document into a tree structure. Where they differ is that XSLT is designed to navigate its own tree model built from the XML, whereas D3.js directly navigates the browser's DOM tree. These models, though similar, are not the same. Indeed, the XSLT approach results in two distinct tree models on the way to an SVG display, with additional processing overhead.

In both cases, however, we might be forgiven for expecting the final results to be broadly similar.

XSLT And Rule-Based Music Glyph Placement

I have no experience with XSLT, but one thing in particular sets off an alarm: in recent years, MusicXML has come to accommodate hard-coded glyph positioning information within the files themselves.

This suggests one of two things: either XSLT does not lend itself well to scalar (algorithmic) positioning, or, however this positioning is calculated, it tends to break down in certain situations.

This is strange for one simple reason: good musical typesetting practice is a long-established and well-documented skill. Time division ('div') information contained in MusicXML should, in combination with the accumulated notes at any given position, be entirely sufficient for scalar/algorithmic positioning, both horizontally and vertically.
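To illustrate that divisions plus pitch can in principle drive placement, here is a deliberately naive sketch. Horizontal spacing is strictly proportional to the onset (real engraving practice compresses longer durations, so treat this only as a starting point), and vertical placement comes from MusicXML's `<step>` and `<octave>` values, assuming a treble clef. `diatonicIndex`, `noteX`, `noteY` and their parameters are hypothetical.

```typescript
const STEPS = ["C", "D", "E", "F", "G", "A", "B"];

// Diatonic index of a pitch: seven steps per octave, C = 0.
function diatonicIndex(step: string, octave: number): number {
  const i = STEPS.indexOf(step);
  if (i < 0) throw new Error(`Unknown step: ${step}`);
  return octave * 7 + i;
}

// Vertical: treble clef assumed, so the bottom staff line is E4.
// Each diatonic step is half a line spacing; SVG y grows downwards.
function noteY(step: string, octave: number, bottomLineY: number, lineSpacing: number): number {
  return bottomLineY - (diatonicIndex(step, octave) - diatonicIndex("E", 4)) * (lineSpacing / 2);
}

// Horizontal: strictly proportional to the onset, measured in MusicXML divisions.
function noteX(onsetDivisions: number, divisionsPerQuarter: number, quarterWidth: number, left = 0): number {
  return left + (onsetDivisions / divisionsPerQuarter) * quarterWidth;
}

// Usage: with the bottom line at y = 100 and lines 10 units apart,
// F5 sits on the top line (y = 60) and C4 on the first ledger line below (y = 110).
const topLine = noteY("F", 5, 100, 10);
const middleC = noteY("C", 4, 100, 10);
const secondBeat = noteX(4, 2, 40, 10); // onset of 4 divisions = 2 quarters in
```

Other clefs only change the reference pitch; the arithmetic is otherwise identical.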

XSLT vs D3.js

XSLT is one of a few close analogues of D3.js. Both are document transformers, or 'visualization kernels'.

XSLT is a declarative language (it describes what to do rather than how to do it), with templating capabilities that lend it some functional characteristics.

D3.js, a JavaScript library written in a functional style, looks vaguely imperative, but its behavior feels declarative.

Many existing notation systems are built on an XSLT foundation, so in theory much of the work involved in readying music exchange files for new applications (in areas such as in-browser, SVG-based music visualization, or WebGL-based augmented or virtual reality web applications) may well already have been done.

Why only 'may'? All the above demand accurate synchronization of data, image and audio, and flexible, powerful image visualization and transformation capabilities, yet XSLT quickly hits limitations in these areas. The following diagram hints at why:

So the problem may in part be due to browser-technology affinities:

Because of their close data-to-DOM bindings, data-driven applications respond instantly and dynamically to changes in a data set. Though XSLT is already a declarative language (describing what you want to do, rather than how to do it), can it really be considered data-driven? Given its expressive shortcomings ("cumbersome") compared to D3.js, it may, for certain types of client-side transformation, be obliged to rebuild some presentation structures from scratch. Here, I wish I knew more.

Can we begin to identify some potential XSLT bottlenecks? Our first cut might be:

In effect, the technology, while suited to document generation, may not be so well suited to the dynamics of data visualization, especially where these are driven from a musical timeline. Every time an XSLT rule is fired, presentation elements are rebuilt.

This would be especially telling in an environment such as ours, where user-led dynamic configuration is an issue.
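For contrast with rebuilding on every rule firing, here is a minimal sketch of a keyed data join in the spirit of D3's `selection.data(data, key)` with its enter, update and exit sets. It is plain TypeScript rather than D3 code, and `Keyed` and `keyedJoin` are hypothetical names: when the data changes, only new items are created and only vanished items are removed, while the rest are updated in place.

```typescript
type Keyed = { id: string };

// Split old and new data into D3-style enter/update/exit sets by key.
function keyedJoin<T extends Keyed>(previous: T[], next: T[]): { enter: T[]; update: T[]; exit: T[] } {
  const before = new Set(previous.map((d) => d.id));
  const after = new Set(next.map((d) => d.id));
  return {
    enter: next.filter((d) => !before.has(d.id)),   // new: create elements
    update: next.filter((d) => before.has(d.id)),   // kept: modify in place (e.g. via a transition)
    exit: previous.filter((d) => !after.has(d.id)), // gone: remove elements
  };
}

// Usage: a user reconfiguration drops note "a" and adds note "d".
const join = keyedJoin(
  [{ id: "a" }, { id: "b" }, { id: "c" }],
  [{ id: "b" }, { id: "c" }, { id: "d" }],
);
// join.enter: [d], join.update: [b, c], join.exit: [a]
```

Only one element is created and one removed; "b" and "c" keep their identity, which is what lets them animate smoothly to new positions instead of being redrawn.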

In sum, this is beginning to push us in the following direction:

Let's explore this possibility a little further.

MusicXML Parsing Using JavaScript Or D3.js

Conceptually, XML parsing can be thought of at two distinct levels: the trivial level of simple XML data extraction (answering questions such as "how many times does 'xxxx' appear in the file?"), and the more complex re-ordering and positioning of elements according to some other, 'higher-level' dimension (such as MusicXML's 'div' timeline).

Any JavaScript/D3.js approach faces the same challenges. Indeed, proprietary, time-based parsing of MusicXML using JavaScript is what currently distinguishes the product 'Soundslice' from other music learning environments. Soundslice, however, sticks to what is effectively single-part instrumental music, thereby avoiding the challenges of multi-voice playback.

To use D3.js to generate musical notation using SVG, then, we are obliged either to select from and transform the SVG output of existing XSLT solutions, or to parse MusicXML from first principles.

With complex (and in a sense recursive) time dependencies in MusicXML, the latter is a non-trivial task.

D3.js And Control

Data visualization libraries such as D3.js take arrays as their raw material, often the product of parsing a large batch of data in a text format such as JSON.

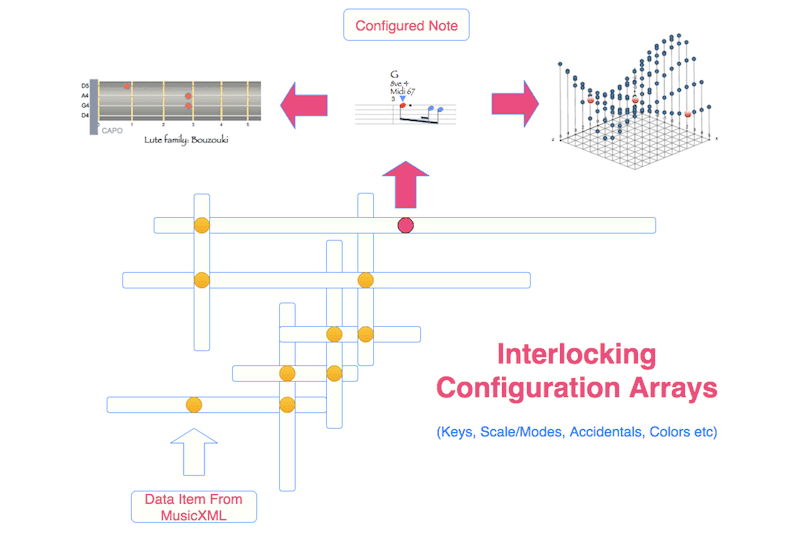

With complex data sets, these can result in array scaffolding, where the value found at a given index in one array acts as index to another. The arrays are, in this sense, interlocking.

Were we to visualize such interlocking arrays, we might see something like the following. From the contents of a MusicXML file, interlocking arrays (representing key, number of parts and voices, instrument types, number of notes in an octave and their individually assigned color codings) build a lattice ultimately spitting out the algorithmically generated position and dimensioning characteristics not just of individual notes, but of entire, populated voice, part and other sections.

Choosing a value from an array (such as the number of notes or tones per octave) may resolve several dependencies. For example, having resolved the modular base and temperament or intonation (12 for MIDI, i.e. Western equal temperament, but there are many others in use worldwide), we can determine which color scales to use, the fret layout of a lute family instrument, which modal scales apply, and the spatial relationships in a lattice or tonnetz theory model.
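A minimal sketch of such interlocking arrays, assuming hypothetical `musicSystems` and `colorScales` tables: the entry chosen in one array supplies both the modular base (notes per octave) and the index into the other array, so one choice resolves the color of every note. Here `noteNumber` counts steps of the chosen system from a reference note (for 12-TET, a MIDI note number).

```typescript
// Build an evenly spaced hue wheel with n colors.
const hueWheel = (n: number): string[] =>
  Array.from({ length: n }, (_, i) => `hsl(${Math.round((360 * i) / n)}, 70%, 50%)`);

// Hypothetical lookup tables: a value in one array indexes into the other.
const colorScales: string[][] = [hueWheel(12), hueWheel(5), hueWheel(24)];
const musicSystems = [
  { name: "12-TET (MIDI)", notesPerOctave: 12, colorScale: 0 },
  { name: "5-EDO", notesPerOctave: 5, colorScale: 1 },
  { name: "24-EDO (quarter tones)", notesPerOctave: 24, colorScale: 2 },
];

// Resolving one choice (the music system) fixes the modular base and the color scale.
function noteColor(systemIndex: number, noteNumber: number): string {
  const system = musicSystems[systemIndex];
  const scale = colorScales[system.colorScale];
  const base = system.notesPerOctave;
  const pitchClass = ((noteNumber % base) + base) % base; // safe for negative numbers
  return scale[pitchClass];
}

// Usage: MIDI 60 (middle C) and 72 share a color; 61 gets the next hue.
const c4 = noteColor(0, 60);
const c5 = noteColor(0, 72);
const cSharp4 = noteColor(0, 61);
```

The same `notesPerOctave` value could equally feed a fret-layout or tonnetz-lattice calculation, which is where the interlocking pays off.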

Feeding off these arrays, D3.js builds, layer by layer on the server side, interface components (some hidden, some visible) which can be grouped and/or assigned classes and ids for subsequent (client-side) selection.

Based on these selections, be they of part, voice, phrase, bar, notes at a particular time division or indeed individual notes, a wide range of visually enthralling transformations can be made. These have (potentially) huge implications for the ad-hoc, labyrinthine and on the whole more dramatic visual narratives of WebGL.
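The grouping-and-tagging step can be sketched without D3 as plain string generation on the server. The hypothetical `toSvg` below nests notes into part and voice groups and tags each note head with an id plus bar and onset classes. In the browser, a D3 call such as `d3.selectAll("#part-1 .voice-2 .note")` or `d3.selectAll(".onset-0")` could then pick out a voice or a vertical slice; the class naming scheme is an assumption.

```typescript
// A note whose position has already been computed (hypothetical shape).
type PlacedNote = { part: number; voice: number; bar: number; onset: number; x: number; y: number };

// Group items by a numeric key, preserving first-seen order.
function groupBy<T>(items: T[], key: (item: T) => number): Map<number, T[]> {
  const groups = new Map<number, T[]>();
  for (const item of items) {
    const k = key(item);
    const list = groups.get(k);
    if (list) list.push(item);
    else groups.set(k, [item]);
  }
  return groups;
}

// Emit nested part/voice groups, tagging each note with an id plus bar and onset classes
// so that later client-side selections can target them.
function toSvg(notes: PlacedNote[]): string {
  let counter = 0;
  const parts = Array.from(groupBy(notes, (n) => n.part), ([part, partNotes]) => {
    const voices = Array.from(groupBy(partNotes, (n) => n.voice), ([voice, voiceNotes]) => {
      const heads = voiceNotes
        .map((n) => `<circle id="note-${counter++}" class="note bar-${n.bar} onset-${n.onset}" cx="${n.x}" cy="${n.y}" r="4"/>`)
        .join("");
      return `<g class="voice voice-${voice}">${heads}</g>`;
    });
    return `<g id="part-${part}" class="part">${voices.join("")}</g>`;
  });
  return `<svg xmlns="http://www.w3.org/2000/svg">${parts.join("")}</svg>`;
}

// Usage: two voices in part 1, one in part 2.
const svg = toSvg([
  { part: 1, voice: 1, bar: 1, onset: 0, x: 10, y: 60 },
  { part: 1, voice: 2, bar: 1, onset: 0, x: 10, y: 90 },
  { part: 2, voice: 1, bar: 1, onset: 2, x: 50, y: 140 },
]);
```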

Moreover, the precision, power and flexibility of these mechanisms have impact at five distinct configuration or control levels:

Even in the context of a user's local environment, we're talking of a massive flexibility boost:

Here we see two types of configuration change in action: the defaults, driven both by the musical source (here MusicXML) and user default environment settings, and any directly user-controlled and propagated 'what-if' type changes.

Already this simple scenario opens up huge potential for musical exploration: comparing instrument configurations, 'best-try' fitting of notations to instruments from a different musical (configuration) culture, comparing theory tool specializations etc. These are explored in more detail in a series of more recent posts, but the point here is that they are enabled by and built on a data-driven base.

XSLT And D3.js - Together?

XSLT is a powerful tool for parsing and transforming MusicXML; D3.js, for creating direct data-to-visualization bindings and transformations.

All this prompts the question of whether, rather than decoupling data and presentation, XSLT could be used to aggregate data into the published SVG, where it can be accessed and processed by more powerful selection and transformation libraries.
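One plausible way to aggregate data into published SVG is to write it as `data-*` attributes, which browsers expose through `element.dataset` (with `data-midi-pitch` becoming `midiPitch`) and which D3 can read via `selection.attr`. In the XSLT scenario, the stylesheet would do the writing; the sketch below shows both directions in TypeScript for illustration. `writeDataset` and `readDataset` are hypothetical names, and the regex reader only handles double-quoted attributes.

```typescript
// Escape / unescape the characters that would break a double-quoted attribute.
const escapeAttr = (s: string): string =>
  s.replace(/&/g, "&amp;").replace(/"/g, "&quot;").replace(/</g, "&lt;");
const unescapeAttr = (s: string): string =>
  s.replace(/&lt;/g, "<").replace(/&quot;/g, '"').replace(/&amp;/g, "&");

// Serialize note data as data-* attributes (camelCase keys become kebab-case).
function writeDataset(data: Record<string, string | number>): string {
  return Object.keys(data)
    .map((k) => ` data-${k.replace(/[A-Z]/g, (c) => "-" + c.toLowerCase())}="${escapeAttr(String(data[k]))}"`)
    .join("");
}

// Read data-* attributes back, mirroring the naming that element.dataset uses.
function readDataset(markup: string): Record<string, string> {
  const out: Record<string, string> = {};
  const attr = /\sdata-([a-z0-9-]+)="([^"]*)"/g;
  let m: RegExpExecArray | null;
  while ((m = attr.exec(markup)) !== null) {
    const key = m[1].replace(/-([a-z])/g, (_whole: string, c: string) => c.toUpperCase());
    out[key] = unescapeAttr(m[2]);
  }
  return out;
}

// Usage: data travels inside the published SVG element itself.
const element = `<circle cx="10" cy="60" r="4"${writeDataset({ part: 1, voice: 2, midiPitch: 64 })}/>`;
const recovered = readDataset(element); // { part: "1", voice: "2", midiPitch: "64" }
```

Values come back as strings, as they would from `dataset`, so numeric fields need converting on the client.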

D3.js has, for example, proven itself perfectly capable of integrating with Web3D declarative languages, so perhaps there is scope here for some data-driven magic.

Clearly, integrating a library such as D3.js with notation created by the XSLT suite is going, on the one hand, to require considerable knowledge of both domains and, on the other, to present a high entry bar in terms of analysis and naming harmonization.

Nevertheless, moves have been made towards an easier marriage of XSLT and D3. One such is JSXML, a set of XSL stylesheets that transform XML into JavaScript code equivalent to JSX, decoupling view and controller code and staying closer to a classic HTML/CSS/JavaScript workflow. JSXML is easy to parse (it uses XML) and can easily be re-targeted to different rendering engines (React, Inferno, D3, etc.).

On the whole, because it allows direct transformations on every visual element in the DOM hierarchy, my feeling is that it may be better to proceed with a purely data-driven JavaScript/D3.js approach. Moreover, D3 has, with D3.express (since renamed Observable), gone 'reactive'.

Perhaps the most compelling argument in D3.js's favor, though, lies in the area of P2P (peer-to-peer) interworking in online teaching and learning. D3.js provides a level of fine-grained, highly flexible selection and transformation control that is difficult to achieve in any other environment. Indeed, its selection and transformation capabilities are, in comparison to XSLT's, simply spectacular.

Big, brave, open-source, non-profit, community-provisioned, cross-cultural and batshit crazy. → Like, share, back-link, pin, tweet and mail. Hashtags? For the crowdfunding: #VisualFutureOfMusic. For the future live platform: #WorldMusicInstrumentsAndTheory. Or simply register as a potential crowdfunder.

Summary: Pros & Cons In A Data Visualization Context

[Table: Pro and Contra of the XSLT and D3.js stacks]

In sum, the core issues with XSLT seem to be the lack of direct data bindings (direct manipulation of the document object model), and the fact that XPath/XQuery is no match for D3.js's powerful selection, transformation and transition capabilities.

In a MusicXML or other exchange format context, these have impact both in a 'vertical' sense (immediate visualization control) and in a 'lateral' sense (P2P interworking). In particular, powerful selection capabilities open up the possibility of activating different selection sets depending on learning needs: for example, horizontally within a score, controls for playback and looping; vertically within a score, controls for note and interval identification.
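The two selection directions can be sketched as two filters over the same note data: a horizontal one returning everything that sounds within a loop region, and a vertical one returning the notes that start together, with their intervals in semitones above the lowest note. `ScoreNote`, `selectLoop` and `selectVertical` are hypothetical names; onsets and durations are in divisions and pitches are MIDI numbers.

```typescript
// Hypothetical note shape: onset and duration in divisions, pitch as a MIDI number.
type ScoreNote = { id: string; onset: number; duration: number; midi: number };

// Horizontal selection: every note sounding at some point within [start, end), e.g. a loop region.
function selectLoop(notes: ScoreNote[], start: number, end: number): ScoreNote[] {
  return notes.filter((n) => n.onset < end && n.onset + n.duration > start);
}

// Vertical selection: notes starting together, with intervals in semitones above the lowest.
function selectVertical(notes: ScoreNote[], onset: number): { notes: ScoreNote[]; intervals: number[] } {
  const chord = notes.filter((n) => n.onset === onset).sort((a, b) => a.midi - b.midi);
  const lowest = chord.length > 0 ? chord[0].midi : 0;
  return { notes: chord, intervals: chord.map((n) => n.midi - lowest) };
}

// Usage: a short passage; loop the second beat, then identify the opening chord.
const passage: ScoreNote[] = [
  { id: "a", onset: 0, duration: 4, midi: 48 },
  { id: "b", onset: 0, duration: 2, midi: 64 },
  { id: "c", onset: 0, duration: 2, midi: 55 },
  { id: "d", onset: 2, duration: 2, midi: 62 },
  { id: "e", onset: 4, duration: 4, midi: 60 },
];
const loop = selectLoop(passage, 2, 4);       // a (still sounding) and d
const opening = selectVertical(passage, 0);   // a, c, b with intervals 0, 7, 16
```

Because both functions return subsets of the same bound data, a teacher and a student could activate different selection sets over one shared score.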


© Copyright 2015 The Visual Future of Music.

Comments, questions and (especially) critique welcome.