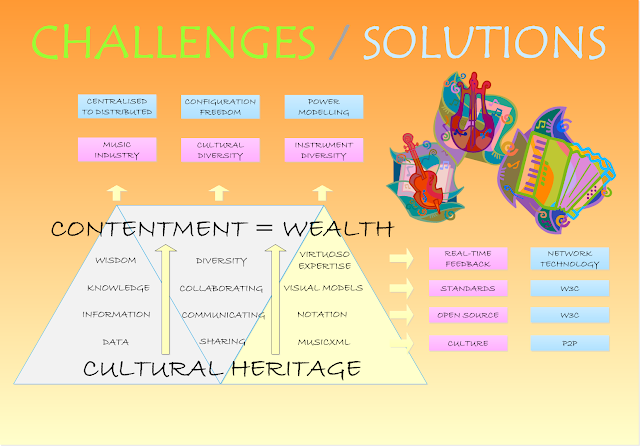

Despite learners' thirst for visual models of musical structure, the vast potential of music visualization (in the form of a single, unifying or aggregator platform) remains entirely untapped. Here is a running tally of the main challenges that the world music visualization aggregator platform in focus here seeks to answer or is likely to encounter. Once resolved, these will clearly have a profound impact on the platform's acceptance and progress.

Big, brave, open-source, non-profit, community-provisioned, cross-cultural and dingbats crazy. → Like, share, back-link, pin, tweet and mail. Hashtags? For the crowdfunding: #VisualFutureOfMusic. For the future live platform: #WorldMusicInstrumentsAndTheory. Or simply register as a potential crowdfunder.

Bridging The Gulf Between Written And Aural Traditions

The 'classical' or 'western' music system is a written one, but it is only one of many in use worldwide. Any form of notation is low-hanging fruit for a world music visualization aggregator platform.

Far more technically challenging are the purely aural traditions. Here, questions such as whether the octave is recognised at all, how many notes or tones there are per octave, and whether temperament or intonation is at play have to be resolved before so much as the first visual aid can be presented. This implies advanced sampling technologies (quite possibly leveraging artificial or machine intelligence) and accessing the 'musical stack' in ways and at points at odds with notation systems based on MusicXML and other exchange formats.
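As a rough illustration of the first of these questions (how many tones per octave, and where they lie), the sketch below folds detected fundamental frequencies into a single octave, expressed in cents above a reference, and groups nearby values into pitch classes. It assumes octave equivalence and an already clean list of pitch estimates, which is precisely the hard part that real pitch tracking or machine-learning models would have to supply. `reference_hz` and the 30-cent grouping tolerance are illustrative choices, not established values.

```python
import math
from typing import List

def cents_in_octave(freq_hz: float, reference_hz: float) -> float:
    """Fold a frequency into one octave, returning cents above the reference (0 <= c < 1200)."""
    cents = 1200.0 * math.log2(freq_hz / reference_hz)
    return cents % 1200.0

def estimate_pitch_classes(freqs_hz: List[float], reference_hz: float,
                           tolerance_cents: float = 30.0) -> List[float]:
    """Group octave-folded pitches into clusters and return each cluster's mean, in cents.

    Assumes octave equivalence. Clusters are formed greedily over the sorted values:
    a new cluster starts whenever the gap to the previous value exceeds the tolerance.
    """
    folded = sorted(cents_in_octave(f, reference_hz) for f in freqs_hz)
    clusters: List[List[float]] = []
    for c in folded:
        if clusters and c - clusters[-1][-1] <= tolerance_cents:
            clusters[-1].append(c)
        else:
            clusters.append([c])
    # Values just below 1200 cents belong with values just above 0 (octave wrap-around).
    if len(clusters) > 1 and (clusters[0][0] + 1200.0) - clusters[-1][-1] <= tolerance_cents:
        wrapped = [c - 1200.0 for c in clusters.pop()]
        clusters[0] = wrapped + clusters[0]
    # Fold merged means back into 0..1200 and return them in ascending order.
    return sorted((sum(cl) / len(cl)) % 1200.0 for cl in clusters)
```

Fed with samples near 0, 203, 386, 702 and 884 cents across two octaves, this returns five clusters, hinting at a pentatonic-like organisation; real recordings would of course need far more robust statistics.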

Consistency & Homogeneity

Any visualization aggregator platform accepting open source contributions is immediately going to face four central challenges related to consistency or homogeneity:

All lie in the realm of contributor education, perhaps best addressed with clear test and qualification rulesets, best practice and style guides.

Interworking Controls

We have a number of possible approaches to interworking between browsers, but these are restricted in the following ways:

Well that should separate the chaff from the corn.. :-E

Arts And Mathematics

Music is emotion, and is taught as a liberal arts subject. Yet music is also mathematics: an expression of physical behaviors and properties.

One of the major challenges in music visualization is to overcome user bias towards the emotional or the technical. A person with a strong emotional relationship with music may find it hard to accept how much underlying mathematical structures and physical properties contribute to the whole. Conversely, musicians focussed on abstract, technical or functional music forms may no longer see any role for emotion.

A visualization aggregator platform has the task of (where desired) bringing these two together.

Multi-Configuration Instruments

Some instruments, such as the accordion, bring an additional modeling challenge in the form of entirely different configurations for the right and left hands.

One accordion hand may be grappling with any of dozens of chromatic or diatonic (melody-oriented) layouts, the other with any of dozens of chromatic or diatonic (chord-oriented) layouts. In the case of the concertina, left and right hands may both be playing melody, or indeed the occasional chord.

Combined, these can result in literally thousands of joint configurations. In modeling terms, we have to functionally separate each hand while preserving the musical homogeneity of the whole. This may sound non-trivial, but in practice the resolution of many problems is inherent in the original score. Instruments and voices (and hence hands) are often clearly separated and hence individually selectable, the data being fed to the left or right hand as the score implies. Nevertheless, there will always be room for user preference, such as deciding on specific pitch-range cut-off points for each hand.
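A minimal sketch of that hand-assignment logic, assuming each note may carry a staff number (as MusicXML's `<staff>` element provides for multi-staff parts) and falling back to a user-chosen pitch cut-off where the score gives no hint. The `Note` type, the "staff 1 = right hand" convention and the default cut-off of MIDI 60 (middle C) are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class Note:
    midi: int                    # MIDI pitch number (60 = middle C)
    staff: Optional[int] = None  # 1 = upper staff, 2 = lower staff, None if the score gives no hint

def assign_hands(notes: List[Note], cutoff_midi: int = 60) -> Dict[str, List[Note]]:
    """Split notes between hands: follow the score's staff where present, else a pitch cut-off."""
    hands: Dict[str, List[Note]] = {"right": [], "left": []}
    for note in notes:
        if note.staff is not None:
            # The score already separates the hands; simply follow it.
            hand = "right" if note.staff == 1 else "left"
        else:
            # User preference: notes at or above the cut-off go to the right hand.
            hand = "right" if note.midi >= cutoff_midi else "left"
        hands[hand].append(note)
    return hands
```

Lowering `cutoff_midi` moves unassigned notes towards the right hand, which is exactly the kind of per-user preference the score cannot settle on its own.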

Multiple Technology Stacks

Music notation needs to serve three distinct technology areas: 2D graphical modeling (instruments and theory tools), artificial intelligence (from musical data, identifying and optimising musical information), and the virtual worlds of Web3D (WebAR and WebVR).

Fingerings

The lack of flexibility in assigning fingerings to a piece of music is quite frankly the single most challenging issue in the creation of a music visualization platform.

The problem with fingering annotations lies in the notation exchange files themselves, and in MusicXML (the de facto standard for music notation exchange, now maintained by the W3C Music Notation Community Group) in particular. The same problem crops up in other music exchange formats, though.

The 'real-life' relationship between notation and possible fingerings is inescapably ‘one-to-many’, yet MusicXML permits only 'one-to-one'. That in turn forces pointless duplication of what is in all other respects an identical score.

Fingerings in musical interpretation reflect many competing aims, such as elements of style, musical timbre and, perhaps above all, tension. Each fingering is an expression of one player's preferences, and there are always trade-offs to be made. A fingering bringing out the full resonance of the classical guitar may, for example, compromise the fluidity of play, and vice versa.

Fingerings can be expected to vary again in environments supporting various tunings. Bluegrass, for example, has a strong tradition of alternative tunings. In support of fast, modal (and longitudinally oriented, minimal chord-shape-change) play, an Irish session guitarist will often prefer DADGAD or dropped-D tuning over the standard (positional, large chord-shape-change) EADGBE tuning.
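The one-to-many relationship is easy to demonstrate. The sketch below lists every (string, fret) position at which a given pitch can be played under a given tuning; switching from EADGBE to DADGAD changes the candidate set. Tunings are written as MIDI numbers from lowest to highest string, and the 12-fret limit is an arbitrary assumption.

```python
from typing import Dict, List, Tuple

# Open-string pitches as MIDI numbers, lowest string first (string 6 .. string 1).
TUNINGS: Dict[str, List[int]] = {
    "EADGBE": [40, 45, 50, 55, 59, 64],
    "DADGAD": [38, 45, 50, 55, 57, 62],
    "Drop D": [38, 45, 50, 55, 59, 64],
}

def fingering_candidates(midi: int, tuning: List[int], max_fret: int = 12) -> List[Tuple[int, int]]:
    """Return (string_number, fret) pairs for a pitch; string 1 is the highest-pitched string."""
    candidates = []
    for index, open_pitch in enumerate(tuning):
        fret = midi - open_pitch
        if 0 <= fret <= max_fret:
            string_number = len(tuning) - index
            candidates.append((string_number, fret))
    return candidates
```

For A3 (MIDI 57), standard tuning offers three positions, (5, 12), (4, 7) and (3, 2), while DADGAD adds an open second string, (2, 0). Which one a player chooses is a matter of style, resonance and flow, none of which the note itself records.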

Moreover, a given piece of traditional, regional music may be played on widely differing instruments: here a gaida (bagpipe), there a clarinet, elsewhere a tambura. In Europe, and under (for example) gypsy influence, many tunes were well travelled before settling into the settings accessible to us today. Many turn up in different guises in various cultures.

So even where a melody line has survived intact, different musicians will have their own ideas about finger placement. MusicXML, however, allows only inline ('built-in' or 'hard-coded') fingering annotations. This is a direct route to cultural impoverishment.

Supporting a one-to-many relationship between score and fingerings implies flexible, perhaps purely position-dependent mappings between music exchange files and external fingering files; in other words, an external fingering file, where provided, should be automatically accepted and mapped.
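To make the idea concrete, here is a minimal sketch of one unchanged score overlaid with several external fingering sets. The note key (part id, voice, onset in divisions, MIDI pitch) and the dictionary layout of the "fingering file" are hypothetical, chosen only for illustration; a real format would need agreement across the community. Entries that match no note are reported rather than silently dropped.

```python
from typing import Dict, List, Tuple

# Hypothetical note key: (part id, voice, onset in divisions, MIDI pitch).
NoteKey = Tuple[str, str, int, int]

def apply_fingering(score_notes: List[NoteKey],
                    fingering_file: Dict[NoteKey, str]) -> Tuple[Dict[NoteKey, str], List[NoteKey]]:
    """Overlay one external fingering set on a score without modifying the score itself.

    Returns the fingerings that matched a note, plus any keys that matched nothing
    (for example because the fingering file was made for a different edition).
    """
    known = set(score_notes)
    applied = {key: finger for key, finger in fingering_file.items() if key in known}
    unmatched = [key for key in fingering_file if key not in known]
    return applied, unmatched

# One score, two players' fingerings: no duplication of the score is needed.
score = [("P1", "1", 0, 64), ("P1", "1", 2, 67), ("P1", "1", 4, 69)]
player_a = {("P1", "1", 0, 64): "1", ("P1", "1", 2, 67): "3", ("P1", "1", 4, 69): "4"}
player_b = {("P1", "1", 0, 64): "2", ("P1", "1", 2, 67): "4", ("P1", "1", 6, 71): "1"}
```

Swapping `player_a` for `player_b` changes only the overlay; the score stays a single file, which is the point of a one-to-many mapping.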

This in turn implies some means of uniquely identifying each and every note in a MusicXML file. Various mechanisms are possible, ranging from reference to time position ('div', i.e. MusicXML divisions) in combination with part, voice etc., to the purely structural: for example a numeric tree encoding as exemplified by the Hornbostel-Sachs classification system, or indeed by IP addresses. The latter two rest on the fact that a MusicXML file effectively represents a score as a tree structure, with each node uniquely and numerically identifiable by its position in the tree.
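Both identification schemes can be sketched with Python's standard `xml.etree.ElementTree` on a tiny `score-partwise` fragment. The position-based key combines part, measure, voice and onset in divisions, advancing a time cursor over `<note>`, `<backup>` and `<forward>` and letting `<chord/>` notes share the previous note's onset. The structural ID is a dotted path of 1-based child positions, in the spirit of Hornbostel-Sachs numbers. Both formats are illustrative assumptions, not part of MusicXML, and grace notes, cue notes and multiple staves are ignored.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

SAMPLE = """<score-partwise version="4.0">
  <part-list><score-part id="P1"><part-name>Melody</part-name></score-part></part-list>
  <part id="P1">
    <measure number="1">
      <attributes><divisions>2</divisions></attributes>
      <note><pitch><step>E</step><octave>4</octave></pitch><duration>2</duration><voice>1</voice></note>
      <note><pitch><step>G</step><octave>4</octave></pitch><duration>2</duration><voice>1</voice></note>
      <note><chord/><pitch><step>B</step><octave>4</octave></pitch><duration>2</duration><voice>1</voice></note>
      <backup><duration>4</duration></backup>
      <note><pitch><step>C</step><octave>3</octave></pitch><duration>4</duration><voice>2</voice></note>
    </measure>
  </part>
</score-partwise>"""

def note_ids(xml_text: str) -> List[Tuple[Tuple[str, str, str, int], str]]:
    """Return (position key, structural path) for every <note>, in document order."""
    root = ET.fromstring(xml_text)
    results = []
    for p_pos, part in enumerate(root, start=1):
        if part.tag != "part":
            continue  # skip <part-list> and other non-part children
        for m_pos, measure in enumerate(part, start=1):
            cursor = 0      # current time position within the measure, in divisions
            last_onset = 0  # onset of the previous note, reused by <chord/> notes
            for n_pos, elem in enumerate(measure, start=1):
                duration = int(elem.findtext("duration", default="0"))
                if elem.tag == "backup":
                    cursor -= duration
                elif elem.tag == "forward":
                    cursor += duration
                elif elem.tag == "note":
                    if elem.find("chord") is not None:
                        onset = last_onset  # chord members do not advance time
                    else:
                        onset = cursor
                        cursor += duration
                    last_onset = onset
                    key = (part.get("id"), measure.get("number"),
                           elem.findtext("voice", default="1"), onset)
                    results.append((key, f"{p_pos}.{m_pos}.{n_pos}"))
    return results
```

Note that the two chord notes (G and B) share a position key, so a position-based scheme would also need pitch or chord index, whereas the structural path ("2.1.3" vs "2.1.4") is unique by construction. The price of the structural path is fragility: inserting an element upstream renumbers everything after it.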

This topic certainly merits deeper analysis. In the meantime, you can find more detail >here<

Communication and Collaboration

Many online music learning offerings are island solutions: divorced from musical inputs, isolated from other tools or heavily focussed on one particular area. Besides a divisive 'app culture', communication problems and technical complexity also play a role.

Despite an often common mathematical foundation, online music teaching and learning seems to be falling behind other disciplines in its exploitation of media and the web. The causes range from jargon unique to each discipline, overly challenging tool interfaces, the lack of a common and visible framework for formulating complex ideas and over-specialization, to simple gaps in the knowledge essential to collaboration.

Technology, especially that of the web and the cloud, is the meeting place of many music-related disciplines, from the computational or quantitative (physics, musimathics) through the empirical (psychophysics) to the qualitative (anthropology, social studies).

The problem is exacerbated by the lack of platforms allowing wide musical experimentation, the gathering of experience and, ultimately, consensus.

There are of course forums, but these tend to be dominated by an academic establishment quick to challenge any perceived threat to its own sovereignty. I would go as far as to say that, in stifling new ideas, these forums are part of the problem.

The moment ideas floated with the desire to spark discussion are written off as 'poor quality answers', you can be fairly sure the central motivation is not curiosity or a concern for quality, but fear.

Latency

Latency in real-time gaming is inherently easier to compensate for than it would be in the (very much hypothetical) case of musicians playing together in real time. Indeed, games programmers have developed a variety of mathematical techniques (interpolation, prediction, compensation) for simulating synchronised real-time play across the internet.

These rely on the fact that moving bodies have a certain momentum, which persists despite the influence of other, incidental forces. A running figure, for example, can't be expected to make an instantaneous 90-degree turn.
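The momentum assumption behind these techniques shows up clearly in a minimal dead-reckoning sketch: a remote position is extrapolated from the last known position and velocity. The prediction holds while motion continues smoothly and fails exactly where an abrupt change occurs, which is the musical case. Positions, velocities and timings below are arbitrary illustrative values.

```python
from typing import Tuple

Vector = Tuple[float, float]

def extrapolate(last_pos: Vector, velocity: Vector, elapsed: float) -> Vector:
    """Dead reckoning: predict where a body is now, assuming it kept its last velocity."""
    return (last_pos[0] + velocity[0] * elapsed, last_pos[1] + velocity[1] * elapsed)

def prediction_error(predicted: Vector, actual: Vector) -> float:
    """Euclidean distance between the predicted and the true position."""
    return ((predicted[0] - actual[0]) ** 2 + (predicted[1] - actual[1]) ** 2) ** 0.5
```

A runner last seen at (0, 0) moving at 5 m/s along x is predicted at (1, 0) after 0.2 s of network delay. If the runner kept going, the error is zero; had they made an instantaneous 90-degree turn to (0, 1), the error would be about 1.41 m. A note jumping by an octave is the musical equivalent of that turn, with nothing in the preceding data to predict it.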

In music, the equivalent of a 90-degree turn is very much possible. Moreover, our hearing is so sensitive to timing discrepancies as to leave little time to apply any correction. Playing music together (even live and directly face-to-face) relies on split-second accommodation of minute changes of pitch, rhythm, attack or delay.

Though nowhere near as daunting as the challenges faced by multiplayer games, the move from local, single-user usage to remote teaching and learning (even with a one-way information flow) brings its own latency challenges.

The latencies we are talking of are not those of two-way synchronization, but the modest yet noticeable corrections needed to align streamed video and/or audio with notation time divisions (divs) after transfer over the internet. We need to be sure of bringing each together and presenting (with the addition of any instrument models or theory tools) a synchronised whole on the receiving end.
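Before notation can be aligned with streamed media, each notation position (counted in MusicXML divisions, where `<divisions>` gives the number of divisions per quarter note) has to be converted to seconds. A minimal sketch, assuming a tempo map of (onset in divisions, quarter notes per minute) pairs, sorted by onset and starting at 0; the function name and tempo-map layout are illustrative.

```python
from typing import List, Tuple

def divisions_to_seconds(position: int, divisions_per_quarter: int,
                         tempo_map: List[Tuple[int, float]]) -> float:
    """Convert a score position in divisions to seconds from the start of the piece.

    tempo_map: (onset_in_divisions, quarter_notes_per_minute), sorted, first onset 0.
    """
    seconds = 0.0
    for i, (start, bpm) in enumerate(tempo_map):
        next_start = tempo_map[i + 1][0] if i + 1 < len(tempo_map) else None
        # Only the part of this tempo segment that lies before `position` counts.
        segment_end = position if next_start is None else min(position, next_start)
        seconds += (segment_end - start) / divisions_per_quarter * 60.0 / bpm
        if next_start is None or position <= next_start:
            break
    return seconds
```

With 2 divisions per quarter and a tempo change from 120 to 60 quarter notes per minute at division 8, position 12 lies 2 s + 2 s = 4 s into the piece. These are the times the receiving end has to match against the media clock.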

Assuming we can find a way of synchronising all our source feeds (which I doubt is quite rocket science), Soundslice has demonstrated that they can be made to play back together perfectly well on the client or slave side. As long as time tokens or markers are present, we should be capable of correct replay.

For our simple, one-way flow scenario, in the worst case, a one-off preparatory scan (either of live play or of a score) by an AI agent could provide more than enough information for predictive correction, using either sampled or synthetic tones. Whether there is any advantage over simply playing with a recording, though, is another matter.. :-)

Teaching and learning, however, need only a one-way connection, simplifying matters considerably. As can be seen in the diagram above, two data flows are envisaged: one for interworking controls and one for media. The latter will need to be synchronised with the notation and other animations on the student (local, or slave) end. This implies establishing some means of synchronization (for example metronome ticks or in-stream time tokenization) at the master end. Given established open-source audio tools such as Audacity, this is an area where the power of the crowd may work wonders.
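One way such tokens might be used on the student (slave) side: the master embeds periodic tokens pairing a media timestamp with a score position, and the receiver interpolates between the two tokens surrounding the current playback time. A user-set offset stands in for manual fine synchronization. The token layout and `score_position` function are hypothetical; a real implementation would also have to cope with lost or late tokens.

```python
from bisect import bisect_right
from typing import List, Tuple

# Hypothetical token: (media_time_seconds, score_position_in_divisions), sorted by media time.
Token = Tuple[float, float]

def score_position(media_time: float, tokens: List[Token], user_offset: float = 0.0) -> float:
    """Map the current media playback time to a score position by linear interpolation."""
    t = media_time + user_offset
    times = [tok[0] for tok in tokens]
    i = bisect_right(times, t)
    if i == 0:
        return tokens[0][1]   # before the first token: clamp to the start
    if i == len(tokens):
        return tokens[-1][1]  # after the last token: hold the last known position
    (t0, p0), (t1, p1) = tokens[i - 1], tokens[i]
    return p0 + (p1 - p0) * (t - t0) / (t1 - t0)
```

With tokens every second at 4 divisions per second, media time 0.5 s maps to division 2, and a 0.25 s user offset shifts that to division 3. Interpolating between tokens keeps the animation smooth even when tokens arrive only sparsely.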

There is also the option of manual synchronization by the end user ("Fine Sych" in the diagram), should there still be disparities. With fluctuating network loads, however, this is unlikely to be satisfactory.

Graphics Rendering: SVG vs WebGL

The graphical power and flexibility of the aggregator platform rest on two principal foundations: Scalable Vector Graphics (SVG) and the data visualization library D3.js.

The greatest challenge in this context is that the graphical objects managed by D3.js live in the browser's Document Object Model (DOM), and that each DOM element carries a sizeable collection of attributes, styles and bound data. Every change to these incurs heavy processing overheads, so much so that large, data-rich animations elsewhere have been reported to 'stutter'.

With music in the browser, much of the graphical load occurs on initial display of the notation and tools, and during subsequent, separate (playback-driven) animation. These playback loads involve not thousands, but a limited selection (at most hundreds) of screen objects. With much of the processing underlying transformations and transitions executed in JavaScript, the actual rendering loads have so far, in my experience, been entirely acceptable.

The problem with graphical objects is that rendering loads rise steeply as they proliferate: a large classical orchestral work may conceivably grind to a standstill. Moreover, given CPU-intensive background loads (such as disk-to-disk copying or scheduled backups), playback display can, as with almost any application, be visibly impacted.

SVG stutter used to be seen routinely in larger animations, but has since been largely eliminated by timers synchronised with the display refresh (the browser's requestAnimationFrame), an approach D3.js's timer module has refined only in the last year or two. Animations driven in this way are significantly less affected by other system loads.

Moreover, with the push towards web-based forms of virtual and augmented reality, mobile screen resolutions in particular (and with them CPU and GPU specifications) are already reaching levels until recently reserved for desktop machines. Digital ecosystem producers such as Apple now find themselves in a wild scramble to catch up with production and consumer markets that long ago left them behind.

In parallel, techniques have become available to preprocess (server-side rendering), reduce (shadow DOM) or offload (CPU to GPU) graphics processing.

Hardware capacities continue to rise, and will likely make rendering problems irrelevant. Because technology will always catch up with ideas, my instinct has been to lay doubts aside and work with the best-in-class technology, in our case D3.js.

Should we still hit a rendering wall, what are the alternatives? We should be looking not to exchange our workhorse library, but to find a faster rendering method.

In this sense, the entire layered instrument or theory tool configuration workflow can remain in place, but instead of rendering to SVG, we could render to WebGL using a library such as Pixi.js. All dimensioning information remains valid, but instead of binding to DOM elements, we bind directly to low-level bitmapped graphics. You can get a feeling for this approach in >this article< and its >accompanying implementation<.

So what is the takeaway? Develop with D3.js and cross performance bridges as they arise.

Music System Diversity

Ideally, the music engine at the heart of the system needs to be able to cater programmatically for multiple music systems. In this sense, it needs to be configurable, fast, lightweight and flexible.

Moreover, there are two potential applications: assisting in the generation of notation from any source (music exchange file or audio), and recognising simple forms of musical 'meta-knowledge' useful to a user, such as intervals, modal scales and chord suggestions.

Indeed, to use a construction metaphor, the former can be thought of as structural, the latter effectively cladding.
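As an example of the 'cladding' layer, here is a minimal sketch of chord suggestion for 12-tone equal temperament: reduce the sounding pitches to pitch classes, then test each pitch class as a possible root against a small table of triad interval patterns. The table, note spellings (sharps only) and function name are illustrative assumptions.

```python
from typing import List, Optional

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Triad qualities as sets of semitone intervals above the root (12-tone equal temperament only).
TRIADS = {
    "major": {0, 4, 7},
    "minor": {0, 3, 7},
    "diminished": {0, 3, 6},
    "augmented": {0, 4, 8},
}

def name_triad(midi_pitches: List[int]) -> Optional[str]:
    """Return a name such as 'A minor' if the pitch classes form a known triad, else None.

    Symmetrical chords (e.g. augmented triads) are named after the lowest pitch class tried.
    """
    pitch_classes = {p % 12 for p in midi_pitches}
    for root in sorted(pitch_classes):
        intervals = {(pc - root) % 12 for pc in pitch_classes}
        for quality, pattern in TRIADS.items():
            if intervals == pattern:
                return f"{NOTE_NAMES[root]} {quality}"
    return None
```

Because inversions and doublings collapse into the same pitch-class set, A3-C4-E4 and C4-E4-A4 both come out as "A minor". The hard-wired 12-semitone arithmetic is exactly what would have to be generalised for other music systems.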

To my knowledge there is no single JavaScript library which caters both to equal-tempered and to just-intoned music systems. Currently, this platform uses the excellent teoria.js, in the hope that someone with a heart for culture and diversity extends it to cater for just-intoned systems, and for all the other equal temperaments besides.. ;-)
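The difference between these systems is easy to show numerically: the same interval lands at different frequencies in 12-tone equal temperament, in other equal divisions of the octave, and in just intonation. A minimal sketch, assuming a 440 Hz reference and one common set of 5-limit just ratios for the major scale; `edo_frequency`, `just_frequency` and `cents` are illustrative helpers, not part of teoria.js.

```python
import math
from fractions import Fraction
from typing import List

# 5-limit just-intonation ratios for major scale degrees 1..7 (one common choice among several).
JUST_MAJOR: List[Fraction] = [Fraction(1), Fraction(9, 8), Fraction(5, 4), Fraction(4, 3),
                              Fraction(3, 2), Fraction(5, 3), Fraction(15, 8)]

def edo_frequency(reference_hz: float, steps: int, divisions: int = 12) -> float:
    """Frequency `steps` above the reference in an equal division of the octave."""
    return reference_hz * 2 ** (steps / divisions)

def just_frequency(reference_hz: float, ratio: Fraction) -> float:
    """Frequency at a just (whole-number) ratio above the reference."""
    return reference_hz * float(ratio)

def cents(f1: float, f2: float) -> float:
    """Interval from f1 up to f2 in cents (1200 cents per octave)."""
    return 1200 * math.log2(f2 / f1)
```

A major third above 440 Hz is about 554.37 Hz (400 cents) in 12-tone equal temperament but exactly 550 Hz (about 386.3 cents) as a just 5:4, a gap of roughly 14 cents. Setting `divisions=24` gives the quarter-tone grid often used as an approximate notation for Arabic maqam, where 7 steps yield a 350-cent neutral third. A library serving world music would need all three views.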

Musical Passion

Learning an instrument is hard work with some serious challenges. Learner enthusiasm often boils down to a mix of early identification, high-quality role models, focussed listening, a social context, a fundamental belief in one's own abilities and the thrill of the unknown. Any learning environment has to support and build on these.

Online learning is devoid of a social context, and can, with production methods focussed around mass learning, eliminate much of the spontaneity and enthusiasm flowing between teacher and learner.

If it is easy to become bored and disengaged with online or TV series whose budgets run into the millions, it is little wonder that music learners abandon often dry online courses focussed on technique, repertoire and musicality and produced on a budget of thousands.

While good tools will find application, peer-to-peer video chat teaching is at the mercy of the online teachers themselves. The platform can, however, help by providing core genre-specific links.

Clearly, it is in everyone's interest to keep the channels for musical passion as open as possible. To my mind, however, this is primarily a matter of teacher creativity, the best any platform can offer being high transparency.

Security

Site integrity (user security and intellectual property) is constantly at risk with any internet-connected device.

SVG (Scalable Vector Graphics) has been described as 'scriptable vector graphics', and as such represents perhaps the single most substantial vulnerability in the concept. In particular, it is possible to execute 'weaponised' JavaScript from within SVG.

A failure to separate data from code is a problem fundamental to software security, and SVG mixes the two by default. Vulnerabilities (sorry for the jargon):

It makes sense, then, that we follow some simple guidelines:

Big topic. More to follow..
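One frequently recommended guideline is to treat contributed SVG as untrusted and allow only known-safe elements and attributes. Below is a minimal whitelist sketch using Python's standard XML parser, as might run server-side before contributions are accepted. The allowed sets are illustrative and deliberately tiny, `style` and link attributes are simply dropped, and for untrusted input the parsing step itself should be hardened (for example with the defusedxml package). For production use, a maintained sanitiser such as DOMPurify in the browser is the safer choice.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"
ET.register_namespace("", SVG_NS)  # serialise SVG as the default namespace rather than "ns0:"

ALLOWED_ELEMENTS = {"svg", "g", "rect", "circle", "ellipse", "line", "path", "text", "title"}
ALLOWED_ATTRIBUTES = {"width", "height", "viewBox", "x", "y", "cx", "cy", "r", "rx", "ry",
                      "x1", "y1", "x2", "y2", "d", "fill", "stroke", "stroke-width", "transform"}

def local_name(name: str) -> str:
    """Drop any '{namespace}' prefix that ElementTree adds to tags and attribute names."""
    return name.rsplit("}", 1)[-1]

def sanitise_svg(svg_text: str) -> str:
    """Remove non-whitelisted elements (e.g. <script>) and attributes (e.g. onload, href)."""
    root = ET.fromstring(svg_text)
    if local_name(root.tag) != "svg":
        raise ValueError("not an SVG document")
    _clean(root)
    return ET.tostring(root, encoding="unicode")

def _clean(element: ET.Element) -> None:
    for child in list(element):
        if local_name(child.tag) not in ALLOWED_ELEMENTS:
            element.remove(child)  # drops the element and everything inside it
        else:
            _clean(child)
    for attr in list(element.attrib):
        if local_name(attr) not in ALLOWED_ATTRIBUTES:
            del element.attrib[attr]  # event handlers such as onclick never survive
```

A whitelist is preferred over a blacklist here because new script-bearing attributes and elements keep appearing; anything not explicitly allowed is removed.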

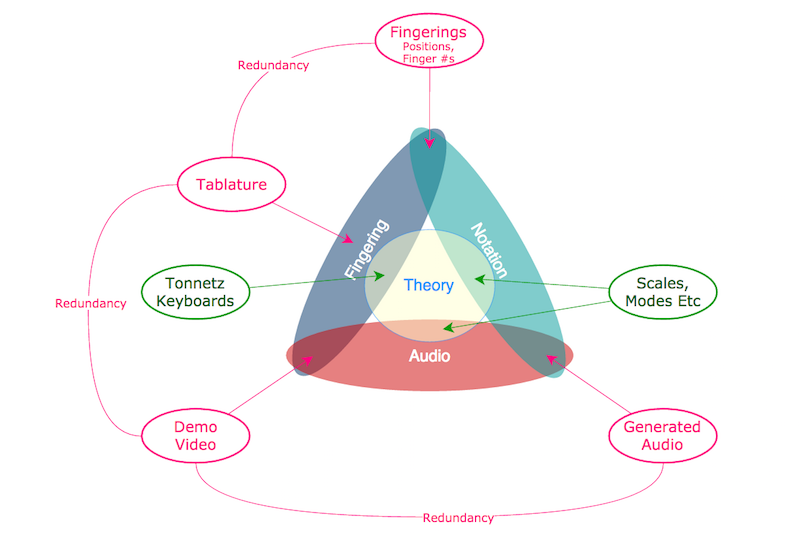

Visual Redundancy

Many online notation programs feature some degree of visual redundancy (for example tablature, instrument model fingerings and chord diagrams all showing the same information), which can quickly and sometimes unwittingly turn into information overload. While giving the user choice about how information is to be presented, it's probably a good idea to police potential redundancies on his or her behalf.

Left- Or Right- Handed Play

A tiny minority of instrumentalists are lucky enough to call themselves ambidextrous. Most of us, however, have a clear strength in either right- or left-handed play.

Though widely overlooked in teaching books, handedness might, in a data or music visualization sense and given the right dimensioning and placement choices, prove trivial to support. I see one-click change of instrument orientation as a very worthwhile goal, and certainly a topic for further exploration before the release of any 'template' or 'best practice' code examples into the internet wilds.
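If instrument models are dimensioned from a consistent origin, a one-click orientation change can reduce to mirroring horizontal coordinates about the model's width. A minimal sketch, assuming each drawable element (string, fret, button) is described by a left edge and a width in model units; the `Shape` type is hypothetical.

```python
from dataclasses import dataclass, replace
from typing import List

@dataclass(frozen=True)
class Shape:
    name: str
    x: float      # left edge, in model units
    width: float

def mirror(shapes: List[Shape], model_width: float) -> List[Shape]:
    """Flip a model left-to-right: each shape's left edge becomes model_width - (x + width)."""
    return [replace(s, x=model_width - (s.x + s.width)) for s in shapes]
```

Mirroring twice restores the original layout. A single SVG transform such as `scale(-1, 1)` would also flip the model, but it mirrors text labels along with it, which is one reason to mirror the coordinates instead.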

Summary

Perhaps because complexity and bloat have been avoided here wherever possible, many design decisions run contrary to widespread practice. They were instead arrived at through careful vetting over an extended period of time, with little pressure to conform.

Here is something of a road map for this platform and its future.

Keywords

online music learning,

online music lessons

distance music learning,

distance music lessons

remote music lessons,

remote music learning

p2p music lessons,

p2p music learning

music visualisation

music visualization

musical instrument models

interactive music instrument models

music theory tools

musical theory

p2p music interworking

p2p musical interworking

comparative musicology

ethnomusicology

world music

international music

folk music

traditional music

P2P musical interworking,

Peer-to-peer musical interworking

WebGL, Web3D,

WebVR, WebAR

Virtual Reality,

Augmented or Mixed Reality

Artificial Intelligence,

Machine Learning

Scalable Vector Graphics,

SVG

3D Cascading Style Sheets,

CSS3D

X3Dom,

XML3D

Online Music Education,

Remote Music Education

Equal Temperament,

Just Intonation

world music visualization

world music visualisation

© Copyright 2015 The Visual Future of Music.

Comments, questions and (especially) critique welcome.