How can world music visualization (or visualisation) and the 3D web (WebGL-based Web3D, i.e. WebAR and WebVR) be brought together for broad, cross-cultural social value generation?

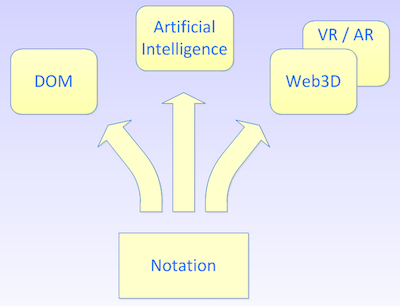

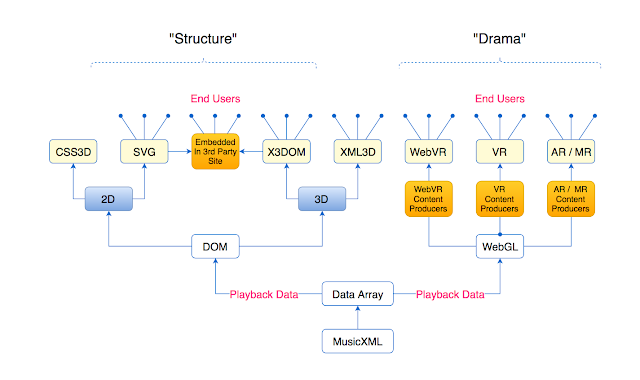

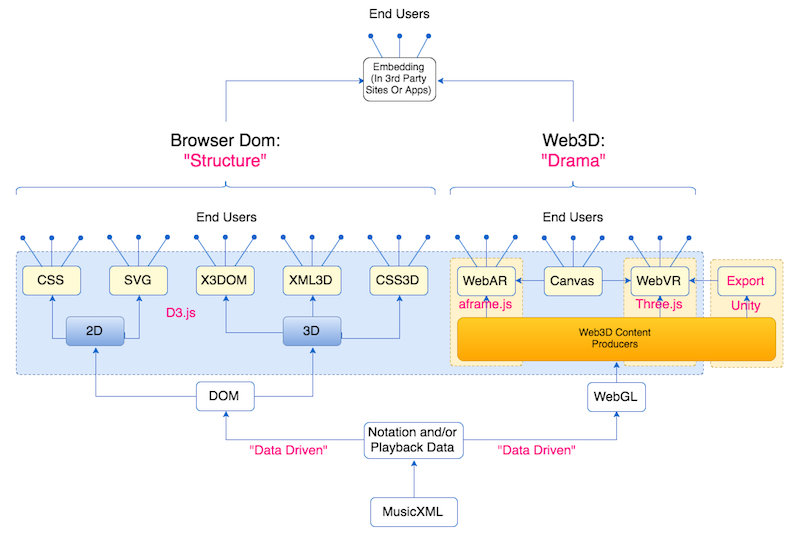

I'd like to briefly revisit the overview of augmented and virtual reality stacks introduced in a much earlier post, this time focussing on the 3D web offerings (those on the right-hand side of the diagram), with the aim of narrowing down the field.

These can (in some cases) comprise repurposed 2D web stack technologies such as CSS and SVG, but also open (though not necessarily W3C-governed) 3D standards-based libraries such as XML3D and X3DOM, and the various WebGL-based (Web3D, i.e. WebAR and WebVR) approaches. The focus is on a small but hopefully reasonably durable selection.

In current music visualization, the basic shapes being manipulated are often 3D ones, that is to say with volume. In music, however, we are generally more concerned with latticework structures in some plane, comprising nodes (notes) and connectors (intervals). As regards choice of implementation environment, this may be an important distinction: while nodes and connectors can of course be portrayed using 3D elements, their essentially planar nature suggests that full 3D may be visual overkill.

BTW, with WebGL 2.0 adoption central to much that follows, you might like to take a look at the corresponding table on the 'Can I use' (caniuse.com) page.

I see at least three contexts under which a music source might be used as a 'driver' of on-screen events. Clearly, some form of music exchange file may be helpful in all three contexts:

- 'Literal' music learning (structural, with known, visually modeled instrument and theory tools). This is relevant both to self-directed and remote or directed learning.

- 'Figurative' (ad-hoc, gamified, dramatic) music learning. Here we can begin to anticipate 'social' learning, where the group and interaction promise a more immersive experience.

- Directly music-driven gaming

We can think of these in a DOM/SVG and a WebGL/Web3D context:

As you can see, conventional MusicXML exchange files may, after parsing and depending on application, be able to provide domain data, object skins (in the form of image captures) for transfer into Web3D workflows, and a substantial amount of layered modeling knowledge. Given the diversity of the contributing animations, the possibilities here seem endless: I leave you to think this through.

Music-driven games are those where a music data stream has an impact on the game narrative equivalent to and on a par with user actions: in effect an additional control stream. There are many ways of exploiting this, from associating object color, shape, family or other behavior with any of pitch, octave, note position in octave, through to memory challenges involving note names in various cultures, or familiarity with individual parts or voices.
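To make the idea of a music data stream as an extra control stream a little more concrete, here is a minimal sketch in TypeScript. It assumes a hypothetical `NoteEvent` shape and an arbitrary mapping (pitch class to hue, octave to lightness); neither comes from any particular library, and any other association of pitch data with object behaviour would do just as well.

```typescript
// Hypothetical shape of a note event; the field names are illustrative only.
interface NoteEvent {
  step: "C" | "D" | "E" | "F" | "G" | "A" | "B";
  alter: number;  // semitone alteration: -1 flat, 0 natural, 1 sharp
  octave: number; // scientific pitch notation, e.g. 4 for the octave of middle C
}

interface ObjectStyle {
  hue: number;       // degrees, one colour per pitch class
  lightness: number; // percent, higher octaves are brighter
  color: string;     // CSS hsl() string
}

const SEMITONES: Record<NoteEvent["step"], number> = {
  C: 0, D: 2, E: 4, F: 5, G: 7, A: 9, B: 11,
};

// Position of the note within the octave (0..11), ignoring the octave itself.
function pitchClass(note: NoteEvent): number {
  return (((SEMITONES[note.step] + note.alter) % 12) + 12) % 12;
}

// Arbitrary illustrative mapping: pitch class drives hue, octave drives lightness.
function styleFor(note: NoteEvent): ObjectStyle {
  const hue = pitchClass(note) * 30; // 12 pitch classes spread over 360 degrees
  const lightness = Math.min(90, Math.max(10, 20 + note.octave * 10));
  return { hue, lightness, color: `hsl(${hue}, 80%, ${lightness}%)` };
}
```

Here `styleFor({ step: "A", alter: 0, octave: 4 })` gives a hue of 270 degrees. The resulting colour string could just as easily be handed to an SVG fill as to a WebGL material.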

DOM VS WebGL

So why, if our main focus is the desktop, its generous screen real estate and better bandwidth, the browser and pin-sharp interactive 2D visualizations, should we interest ourselves in 3D and the gamer's world?

For one, visually simpler forms of 3D are reasonably easily integrated into our aggregator platform's envisaged Theory Tools arsenal (see the Theory Tools menu below the banner image, or the related Pinterest pages). On the other hand, full WebGL-based environments offer a quite different learning experience, and this inevitably signals opportunity.

As the correlation between imagined (visualized) and physical practice becomes better understood, I expect the impact of research on cognition and learning to be especially strong on the Web3D side. Who knows what will come: it may be that thousands of hours of physical practice familiarizing oneself with modal scales, for instance, can be condensed into a fraction of the time through forms of artificial- and virtual-reality-assisted learning that are, for the moment, barely imaginable. Artificial intelligence, too, is sure to bring huge gains.

Even visually less structured WebGL-based 3D needs, however, to get its musical data from somewhere - preferably in real time, and clearly and consistently sliced and diced. My feeling is that the DOM tree / SVG world may be able to help out in this respect by providing image skins for WebGL objects.

BTW, though it doesn't touch on anything other than navigational interactivity, here is a gentle intro to 'real-time' rendering for the 3D web.

By way of orientation, here is a quick overview of some of the more visible landmarks in the 3D modeling world. We'll come back to some of these later.

DOM or WebGL: Choices And Challenges

The browser's DOM provides us with an exceptionally powerful selection mechanism targeting each DOM element's id and/or class. These allow us not only to maintain behavioural consistency across a wide range of animations, but to finely target individual elements for change. This capability is more or less lost when we move across to WebGL.

It would be helpful if all graphical processing -including that associated with conventional 2D- could be done on the GPU. It turns out that without resorting to WebGL, this isn't so easy. There are libraries claiming to do this, but as they haven't yet gained much traction, I'm wary.

At its simplest, you need only a browser to experience 3D on the web. Stripped of cosmetics and kept simple from a rendering point of view, it can also be reasonably easy on the GPU.

To experience Web3D you -additionally- need a headset. With the arrival of high-resolution, high-frame-rate WebVR and WebAR capable devices, 'mobile-first' is losing ground to the notion of 'data first'. In the background, and despite web standards, there remains the ongoing nuisance of proprietary technologies tying users to a particular hardware or software ecosystem.

MusicXML As Driver Of Narrative

Music is a language, every piece of music a storyline or narrative with its own unique dynamics, tension and emotional impact.

In this sense, data extracted from MusicXML is a prime candidate, both within our aggregator platform and via public API, as an additional driver of dramatic, gaming-style virtual storylines.
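As a rough illustration of MusicXML as an event source, the sketch below pulls pitched notes out of a MusicXML fragment and gives each an onset time. It is a deliberate simplification: it uses regular expressions rather than a real XML parser (in the browser, `DOMParser` would be the safer choice), assumes a single voice, and ignores chords, ties and tempo. The `ScoreEvent` type and the function names are my own, purely for illustration.

```typescript
// One pitched note, with its onset measured in MusicXML "divisions".
interface ScoreEvent {
  step: string;     // note letter, "A".."G"
  alter: number;    // semitone alteration, 0 when <alter> is absent
  octave: number;
  onset: number;    // start time, in divisions since the start of the fragment
  duration: number; // length, in divisions
}

// Text content of the first <tag>...</tag> found in xml, or undefined.
function firstTag(xml: string, tag: string): string | undefined {
  const match = new RegExp("<" + tag + ">([^<]*)</" + tag + ">").exec(xml);
  return match ? match[1] : undefined;
}

// Assumption: one voice, no chords or ties; rests simply advance the clock.
function extractEvents(musicXml: string): ScoreEvent[] {
  const events: ScoreEvent[] = [];
  const notePattern = /<note[\s>][\s\S]*?<\/note>/g;
  let cursor = 0;
  let match = notePattern.exec(musicXml);
  while (match !== null) {
    const body = match[0];
    const duration = Number(firstTag(body, "duration") || "0");
    const step = firstTag(body, "step");
    const octave = firstTag(body, "octave");
    // Rests have no <step>/<octave>: they produce no event but still take time.
    if (step !== undefined && octave !== undefined) {
      events.push({
        step,
        alter: Number(firstTag(body, "alter") || "0"),
        octave: Number(octave),
        onset: cursor,
        duration,
      });
    }
    cursor += duration;
    match = notePattern.exec(musicXml);
  }
  return events;
}
```

The resulting list of events is the kind of stream that could feed a storyline: each entry says what sounds, when, and for how long.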

That said, rigorous method and learning needn't be at odds with imagination and whimsy. To this extent, everything, really everything, must be data-driven.

We're talking on-demand, freely transformable, musical visualizations: making (for example) music notation bendable, hidable, peek-a-boo visible, twistable, flippable, riot-of-colorable, capable of being turned into jungle flowers, racing cars, dancing dolphins, rolling waves, or classic Disney-style songbirds on telegraph lines.

Transformations as part of an ongoing and developing storyline are one thing, responding to user actions in real time another. The next challenge? Instant, onscreen, programmatic reconfiguration according to changing user needs. For instruments, for example, the ability to freely change core music visualization characteristics such as number of notes per octave, temperament / intonation, or (lute family) to add a capo.
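Reconfiguration of this kind is largely arithmetic. As a sketch, and assuming equal temperaments only (just intonation and other unequal tunings would need a table of ratios instead), fret positions for any number of notes per octave, and the effect of a capo, can be computed as below. The function names and the 650 mm scale length in the usage note are my own illustrative choices.

```typescript
// Distance of each fret from the nut, for an equal temperament with
// `notesPerOctave` steps. Each step shortens the sounding string by a
// factor of 2^(-1 / notesPerOctave).
function fretPositions(scaleLength: number, notesPerOctave: number, fretCount: number): number[] {
  const positions: number[] = [];
  for (let fret = 1; fret <= fretCount; fret++) {
    positions.push(scaleLength * (1 - Math.pow(2, -fret / notesPerOctave)));
  }
  return positions;
}

// Frequency sounded at a given fret. With a capo fitted, frets are counted
// from the capo, so the capo simply adds its own fret number.
function frettedFrequency(openFrequency: number, fret: number, notesPerOctave: number, capo: number = 0): number {
  return openFrequency * Math.pow(2, (capo + fret) / notesPerOctave);
}
```

With `fretPositions(650, 12, 12)` the twelfth fret lands at 325 mm, half the scale length. Switching to `fretPositions(650, 24, 24)` gives a quarter-tone fingerboard whose 24th fret sits at that same midpoint, which is exactly the sort of change a data-driven instrument model should be able to absorb on screen.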

Another challenge: where 2D SVG (used for instrument models, see menus at head of page) is prepared on the CPU, and embedded-canvas, WebGL-based content (potentially 3D theory tools: again, see menus) on the GPU, can both be synchronized via the hardware clock, using (for example) D3.js? I certainly think so.

The last challenge? Whether, under some dual DOM/WebGL scenario (of which there are a few) the system as a whole can maintain synchronization under load, such as for example between animations and their associated audio. For want of a better term, think 'load balancing'. For me, it's a bit of an unknown.
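One way of keeping the two branches in step is to make every frame a pure function of a shared clock, so that neither renderer accumulates timing errors of its own. The sketch below assumes note onsets and durations in seconds and a clock value supplied by the caller (in a browser this might be `AudioContext.currentTime` or `performance.now() / 1000`, but that wiring is left out here). `TimedNote`, `ActiveNote` and `activeAt` are hypothetical names.

```typescript
// A note with its timing expressed in seconds on the shared clock.
interface TimedNote {
  id: string;
  onset: number;
  duration: number;
}

// A note that is sounding now, with how far through it we are (0..1).
interface ActiveNote {
  id: string;
  progress: number;
}

// Stateless lookup: the same clock reading always yields the same answer,
// whichever renderer asks and however many frames were dropped before.
function activeAt(notes: TimedNote[], clockSeconds: number): ActiveNote[] {
  const active: ActiveNote[] = [];
  for (const note of notes) {
    const elapsed = clockSeconds - note.onset;
    if (elapsed >= 0 && elapsed < note.duration) {
      active.push({ id: note.id, progress: elapsed / note.duration });
    }
  }
  return active;
}
```

Both the SVG and the WebGL renderer would call `activeAt` with the same clock reading on each animation frame. A dropped frame then shows up as a skipped image, not as drift against the audio. Whether this holds up under real load is, as said, something that would need testing.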

So, in summary, with these clearly in mind:

- Selection for visual consistency

- Graphical processing (including conventional 2D), if possible on the GPU

- Real-time, data-driven visualisations

- ...and hence on-screen reconfiguration

- Hardware clock synchronisation across 2D and 3D technology stack branches

- 2D and 3D load balancing

..we are ready to start thinking about their application. Is your goal teaching and learning transparency - or gaming and infotainment? Does 3D offer any gain over 2D in the browser? Best thought through carefully before prototyping.

DOM or WebGL: Technology Review

*News Flash*

Particularly intriguing amongst emerging WebGL-based approaches is Stardust, whose API and focus on solving data bindings bear similarities to those of d3.js, but which leverages the GPU, all while claiming to remain platform agnostic.

Complementing rather than replacing d3.js, Stardust maps data not to the DOM but to an array of GPU-rendered graphical elements known as ‘marks’. Developers with D3 expertise are expected to adapt easily to Stardust.

Where D3 provides the better support for fine-grained control and styling on a small number of items, Stardust is profiled as good at bulk-rendering-and-animating of large numbers of marks via parameters.

Indeed, in this context, Stardust’s creators suggest a mix of the two in applications: D3 being used to render (for example) a scatterplot’s axes and handle interactions such as range selections, but Stardust used to render and animate the points.

*News Flash End*

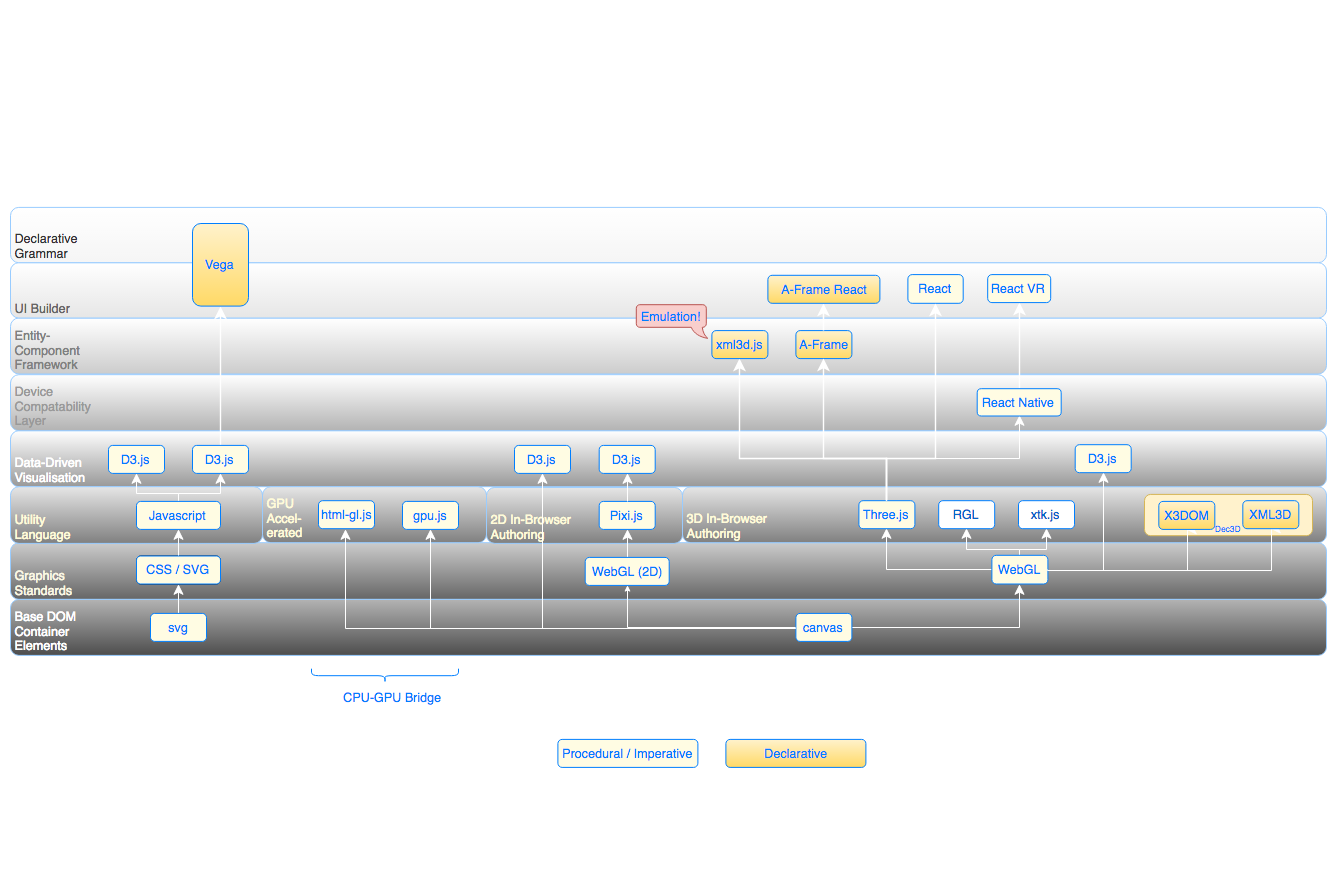

Our world music aggregator platform supports anything that can be dynamically loaded and run in a single page application, and JavaScript libraries can wrap just about anything. So: pure DOM? HTML canvas? WebGL on the DOM via canvas? WebAR or WebVR on the DOM? With d3.js or its declarative wrapper, Vega? R? XTK? X3DOM or XML3D? What actually works - and how can we narrow the field?

Incidentally, I'm just curious as to future animation integration possibilities - and though as adept a faker as the next, certainly no expert. If you spot mistakes, do let me know.

As a reminder, on the left, the (on the whole) declarative, browser DOM technologies, on the right the (on the whole) procedural / imperative 3D polygonal wireframe world of AR/MR/VR. The web DOM space is focussed (on the whole) on structured data and understanding, the VR space on ad-hoc, many-to-many-related objects, the dramatic experience, suspension of disbelief and immersion in the storytelling flow. As concepts go, poles apart..

As can be seen, the Unity (and, by proxy, other) content delivery platforms for 3D games, augmented and virtual reality can also target (export content to) the WebVR, and, increasingly, WebAR stacks.


3D Web Closer Up

Let's immediately distinguish between the term Web3D (referring to all interactive 3D content embedded into a web page's HTML and viewed through a web browser, commonly powered by WebGL, and often known as either WebVR or WebAR) and Augmented and Virtual Reality (A & VR), which tend to run on dedicated devices.

VR objects are modelled, textured, and rendered in lengthy pre-production workflows using (in many cases free) 3D modelling tools and engines such as Maya, Blender, Unity and Unreal. WebVR and WebAR are an attempt at bridging the gap - directly in the browser.

Web3D's convenience lies in lower development overhead and in the fact that WebVR and WebAR content can be experienced both in the browser and on many full-blown VR devices. The buzz? Faster delivery, more users. The biggest obstacle is the lack of native inputs, significantly impeding user interaction. Indeed, many examples handle VR's "six degrees of freedom" (forward/back, up/down, left/right, yaw, pitch, roll) beautifully, but can end up leaving object data content inaccessible. As affairs go, "it's complicated".

Meanwhile, with WebGL now widely supported by default in modern browsers, further technologies such as X3D, X3DOM, Cobweb, three.js, glTF, ReactVR and A-Frame VR are steadily extending 3D capabilities within the web stack.

Can these match or enhance the 2D visually transformative capabilities of D3.js + SVG? Let's take a little stroll from left to right through the possibilities.

WebGL

WebGL is based on OpenGL ES, and provides a base for a small range of 3D rendering libraries and engines, such as PlayCanvas, Turbulenz, Babylon.js and Three.js. As WebGL is canvas-based, the graphical sophistication is not on a par with SVG, but for solids and planes, the results can still be impressive.

The Role of D3.js

In the conventional 2D web, SVG graphics elements can be scripted with JavaScript for CSS and DOM interactivity (onclick, onmouseover etc). Javascript is, however, procedural. Vega, a 'visualisation grammar' (and wrapper for D3.js), provides us with a declarative, data-driven interface. "One unique aspect of Vega is its support for declarative interaction design: instead of the “spaghetti code” of event handler callbacks, Vega treats user input (mouse movement, touch events, etc.) as first-class streaming data to drive reactive updates to a visualisation".

With its declarative and reactive approach, Vega hints at the possibility of HTML-embeddable and highly interactive scores. More on that in a later post.

For the 3D web, D3 eases manipulation of the likes of CSS, XML3D, X3DOM and CSS3D, but can also be used in conjunction with web component, routing and XHR libraries such as React, Vue and Mithril.

D3.js or Vega can, incidentally, be used directly in conjunction with WebGL or (fallback) canvas.

Three.js

Three.js is a cross-browser JavaScript library/API used to create and display animated 3D computer graphics in a web browser. Three.js uses WebGL.

In an enablement sense, Three.js is to WebGL as D3.js is to the DOM. As with D3.js, it has spawned a remarkable ecosystem of derivative and enhancement libraries, some with substantial followings of their own.

CSS

HTML describes structure and CSS describes visual presentation. (In the diagram I've grouped CSS under 'Structure' - in the sense that it forms part of the arsenal of tools surrounding the highly structured browser DOM).

CSS has something of a reputation as a 'poor cousin' in the animated graphics world, but is a powerful tool in maintaining style consistency across a wide range of graphical objects. Moreover, should the choice be made to manipulate CSS styles directly in code rather than using style sheets, this can be managed with a high degree of control within D3.js, via the style attribute in conjunction with class and id attributes.

In effect these allow CSS styles to be inherited much as in the accustomed style sheets. You can get a feel for the simplicity and elegance of CSS use in conjunction with D3.js here.

In recent times, CSS has been the focus of more advanced graphical tools development. Here, for example, is a tool for advanced CSS path clipping.

SVG

Scalable Vector Graphics (SVG) is a declarative language associated with seamlessly scalable (pin-sharp at all sizes) graphics. Basic shapes such as those used in our instrument and theory models are supported across pretty much all browsers.

With all the overhead of the underlying DOM manipulations, however, the CPU is quickly overwhelmed by SVG processing, yet getting the processing to execute on the GPU can be quite a challenge. In some respects, the overheads mirror those of Web3D pipeline workflows.

SVG is more suited to limited but data-rich animations, such as interactive maps, board games - and of course music visualisations. The range and complexity of graphics that can be produced is huge - and they scale seamlessly to any size. SVG is the basis of the aggregator platform in focus here.

Incidentally, conventional 2D graphic (web) designer's animation tools (used, for example, in the non-programmatic creation of SVG art) are unsuited to the creation of dynamic, data-driven music visualisations as in focus here, and of only very limited use in a VR or, indeed, WebVR context. Nevertheless, sophisticated 2D programmatically controlled visualisations are well established in the web browser.

The main problem with SVG (here D3.js-driven) is that there is currently no way of forcing the DOM and graphical donkey-work to be done on the GPU, which is why the CPU is so quickly overwhelmed. To execute on the GPU, D3.js can be used in conjunction with WebGL or (fallback) canvas, but thereby loses much of its visual expressiveness.

SVG usage is likely to increase as (especially mobile device) CPU and GPU clout improves in the wake of the current augmented and virtual reality experimentation. The real breakthroughs will come if and when DOM and WebGL technologies are successfully brought together on a workable scale, and if the W3C can revitalise the ongoing SVG standardisation roadmap.

Mozilla maintains a page providing guidelines for SVG coding, with the aim of cutting down on client-side processing overheads.

Dec3D (Declarative 3D): With XML3D Or X3DOM

Dec3D (Declarative 3D) is a collective name for the XML3D and X3DOM technologies, and is described here.

xml3d

Judging by the images to be found using Google search, xml3d seems to have found favour as a modelling language in manufacturing industries, but does not seem to have caught on amongst web developers. The online examples, though promising, are few.

In the current implementation, xml3d is delivered as a javascript file, xml3d.js, and runs on any browser with WebGL support. The documentation (a Wiki) appears concise, sufficient and well structured.

xml3d supports JSON and (needless to say) XML input formats, the latter of which raises some interesting thoughts in connection with MusicXML.. :-)

With support for events, I see no reason why xml3d should not be used in conjunction with D3.js to create comparatively complex and interactive animations.

x3dom

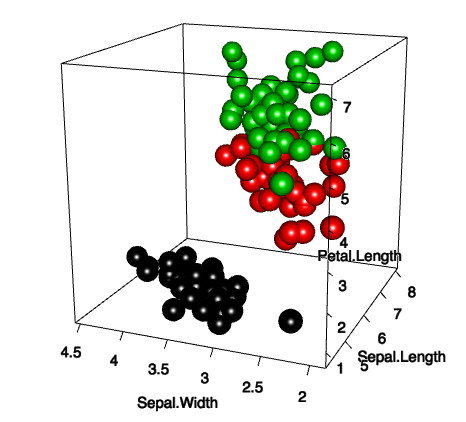

For the web, a declarative form of 3D that lives directly in the DOM does indeed exist - in the form of x3dom. D3.js's creator Mike Bostock was quick to demonstrate the union of D3.js and x3dom. Others followed, culminating in examples that begin to show promise in the context of lightweight, graphical music theory modelling. More examples here and here.

As the x3dom web site puts it:

"The goal here is to have a live X3D scene in your HTML DOM, which allows you to manipulate the 3D content by only adding, removing, or changing DOM elements. No specific plugin or plugin interface (like the X3D-specific SAI) is needed. It also supports most of the HTML events (like "onclick") on 3D objects. The whole integration model is still evolving and open for discussion.

We hope to trigger a process similar to how the SVG in HTML5 integration evolved:

- Provide a vision and runtime today to experiment with and develop an integration model for declarative 3D in HTML5

- Get the discussion in the HTML5 and X3D communities going and evolve the system and integration model

- Finally it would be part of the HTML5 standard and supported by every major browser natively"


While not providing quite the control we have using SVG, it does give us access to CSS styling and events, is declarative, part of the HTML document - and hence integrated with the DOM.

CSS3D

Despite early interest and some intriguing experiments in conjunction with D3.js, CSS3D has yet to really take off. Though it is very good at handling simple pythagorean and platonic solids, perhaps the challenges of putting together complex shapes have discouraged its use in comparison to the wireframe shapes of WebGL. With the arrival of CSS3D graphical editors such as Tridiv, perhaps that will change.

For the meantime, to see what's possible on CSS3D's cutting edge, wrap yer jellybeans round this. A couple of years old, but still seething with promise.

There have been numerous attempts at creating libraries of basic shapes - either via web components, or using visualisation libraries, as in the case of d3-x3dom-shape.

Declarative? Procedural/Imperative?

Before taking a look at finer-grain developments in the 3D web, a look at the distinction between declarative and procedural (a.k.a. imperative) languages. Just skip this block if you are comfortable with the distinction.

Declarative approaches describe what you want to do, but not how you want to do it. In a graphics context you would simply state your graphic object's properties, such as position, shape and size, the rest being done for you. In 'hiding' unnecessary detail, this can lead to very much more compact code, but is often associated with a steeper learning curve.

Both Vega (a wrapper library for D3.js) and Scalable Vector Graphics (SVG) are declarative languages. Given their succinctness and power, it is little surprise that they are associated respectively with complex data-driven applications, and high-precision graphics.

In a 3D DOM context, declarative approaches include those of XML3D, X3DOM and CSS3D. Of these, X3DOM appears to have found the most resonance in conjunction with D3.js / Vega. CSS3D is adequate for manipulating simple pythagorean and platonic solids, but there are no facilities for handling more complex graphics objects such as Bezier curves.

A procedural approach executes a series of well-structured -and often fairly detailed- steps. In a graphics context, a procedural language allows you to give commands to draw from scratch - as if directing a paint brush's moves. Though the workings can be more transparent, reusability and integrity can be more difficult to manage.

Though the web's core technologies (HTML and CSS) are declarative, the HTML 'canvas' element is a fast, procedural / imperative interface to bitmap-based, low-level graphics. JavaScript is used in conjunction with canvas to paint things to the screen. Canvas offers little in the way of graphical elements, and these do not scale well. Suited to bitmapped games, it found use in Soundslice, a fast and successful synchronised notation/instrument/video learning environment for a few of the most popular musical instruments and genres.

A declarative approach is perhaps half as verbose as an imperative one, but can easily take twice the time to master. The result, however, is much more elegant and more easily supported code.
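The difference is easiest to see side by side. In the sketch below the same circles are drawn twice: once declaratively, by stating their properties and serialising them as SVG markup, and once imperatively, by issuing drawing commands modelled on the canvas 2D API (`beginPath`, `arc`, `fill`). The `Pen` interface is a stand-in of my own so that the example runs without a browser; it is not the real `CanvasRenderingContext2D` type.

```typescript
interface Circle {
  cx: number;
  cy: number;
  r: number;
  fill: string;
}

// Declarative: state what each circle is; the SVG renderer decides how to draw it.
function toSvg(circles: Circle[], width: number, height: number): string {
  const body = circles
    .map((c) => `<circle cx="${c.cx}" cy="${c.cy}" r="${c.r}" fill="${c.fill}"/>`)
    .join("");
  return `<svg xmlns="http://www.w3.org/2000/svg" width="${width}" height="${height}">${body}</svg>`;
}

// Minimal stand-in for a canvas-style drawing context (an assumption, see above).
interface Pen {
  fillStyle: string;
  beginPath(): void;
  arc(x: number, y: number, radius: number, startAngle: number, endAngle: number): void;
  fill(): void;
}

// Imperative: spell out every drawing step, in order, for every circle.
function drawCircles(pen: Pen, circles: Circle[]): void {
  for (const c of circles) {
    pen.beginPath();
    pen.arc(c.cx, c.cy, c.r, 0, 2 * Math.PI);
    pen.fillStyle = c.fill;
    pen.fill();
  }
}
```

In the declarative version the circles remain addressable objects after drawing (each `<circle>` can later be selected and restyled); in the imperative one, once the commands have run, only pixels remain.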

Finer-Grain 3D Web Focus

Finally, we try to get a handle on the more recent and specific initiatives in the 3D web space, and especially those of possible use in a music context.

In many cases, these build on or combine the technologies already introduced. As it's easy to get lost in the forest of experimental libraries, we'll stick to the main ones. That means some of what you see in the diagram may find no mention below.

In contrast to augmented and virtual reality, which are often built on platform- rather than web-oriented languages such as C#, the 3D web space tends to be stitched together with JavaScript.

This is an important distinction, both in exposing us to a vast, rich and growing development ecosystem (web components, with their shadow DOM and reuse, being one example) and in bringing wider developer availability. Moreover, whatever happens in the more rarefied AR / VR space, web connectivity is likely to remain central.

ReactVR

Working from the bottom up, we quickly arrive at the first newcomer, ReactVR (or React VR).

Much as D3.js allows us to build complex dynamic and interactive graphics on the browser's DOM, ReactVR allows us to build and position 3D graphical components in a 3D space. From the initial React VR release statement:

"Expanding on the declarative programming style of React and React Native, React VR lets anyone with an understanding of JavaScript rapidly build and deploy VR experiences using standard web tools. Those experiences can then be distributed across the web—React VR leverages APIs like WebGL and WebVR to connect immersive headsets with a scene in a web page. And to maximize your potential audience, sites built in React VR are also accessible on mobile phones and PCs, using accelerometers or the cursor for navigation.

With ReactVR, you can use React components to compose scenes in 3D, combining 360 panoramas with 2D UI, text, and images. You can increase immersion with audio and video capabilities, plus take full advantage of the space around you with 3D models. If you know React, you now know how to build 360 and VR content!"

ReactVR basically allows front-end developers currently creating websites to transition to creating WebVR sites.

A-Frame React

In principle this is two topics in one. A-Frame is a web framework built on top of the DOM, which means web libraries such as React, Vue.js, Mithril.js and d3.js sit cleanly on top of it.

A-Frame is an entity-component-system (ECS) framework exposed through HTML. (ECS is a pattern used in game development that favors composability over inheritance, which is more naturally suited to 3D scenes where objects are built of complex appearance, behavior, and functionality).

In A-Frame, HTML attributes map to components, which are composable modules that are plugged into `<a-entity>`s to attach appearance, behavior, and functionality.
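To illustrate the attribute-to-component mapping, here is a small sketch that generates A-Frame markup for a handful of notes. It only builds strings, so it runs without A-Frame loaded; the `geometry`, `material` and `position` components and the `<a-entity>` and `<a-scene>` elements are A-Frame's own, while `SceneNote` and the helper functions are hypothetical.

```typescript
// Hypothetical description of a note placed in 3D space.
interface SceneNote {
  x: number;
  y: number;
  z: number;
  radius: number;
  color: string;
}

// Serialises properties in A-Frame's "key: value; key: value" attribute syntax.
function component(props: Record<string, string | number>): string {
  return Object.keys(props)
    .map((key) => `${key}: ${props[key]}`)
    .join("; ");
}

// One entity per note: each HTML attribute plugs one component into the entity.
function noteEntity(note: SceneNote): string {
  const geometry = component({ primitive: "sphere", radius: note.radius });
  const material = component({ color: note.color });
  const position = `${note.x} ${note.y} ${note.z}`;
  return `<a-entity geometry="${geometry}" material="${material}" position="${position}"></a-entity>`;
}

function sceneMarkup(notes: SceneNote[]): string {
  return `<a-scene>${notes.map(noteEntity).join("")}</a-scene>`;
}
```

Because the result is ordinary markup, the same note data could equally be bound to the entities with React or D3.js instead of string concatenation.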

A-Frame provides an actual DOM for React to reconcile, diff, and bind to.

aframe-react is a very thin layer on top of A-Frame to bridge with React, and with it web components and reuse.

aframe-react lets A-Frame handle the heavy lifting of 3D and VR rendering and behavior. A-Frame is optimized from the ground up for WebVR with a 3D-oriented entity-component architecture. And aframe-react lets React focus on what it's good at: views, state management, and data binding.

Pixi.js

From the Pixi.js website: "The aim of this project is to provide a fast lightweight 2D library that works across all devices. The Pixi renderer allows everyone to enjoy the power of hardware acceleration without prior knowledge of WebGL. Also, it's fast. Really fast".

Accessed through a data-driven visualisation library such as D3.js, it can be coerced into visually detailed and expressive behaviours. Certainly, for the simple shapes employed in our instrument models, Pixi.js looks like a viable alternative to SVG - with the advantage of being processed on the GPU. This is definitely something I'd like to see tested with an eye to use for our open source animations templates.

Pixi.js has been used within Google Chrome's own Music Lab (showcasing experiments with the Web Audio API) to provide fast and smoothly animated graphical elements.

For all that, though, the range of basic shapes offered is limited.

Hybrids

To round off, attempts have been made (for example html-gl.js) to get DOM-located graphics such as SVG to be calculated on the GPU rather than the CPU, or to bring powerful but essentially platform-dependent solutions to the web (RGL, xtk.js).

At the end of the day, a data-driven notation feed can be supplied to both DOM and Web3D, but the way it is applied will differ radically: notation, instruments and theory on the one, action, adventure and whimsy on the other. In both, however, there is a clear chance to better anchor music learning.

My gut feeling is that D3 can add a level of sophistication to pretty much any of the tools covered in this post. That is as valid for SVG as for WebGL-based approaches. It's worth taking a look at the various 2D and 3D animation demos available on the internet. A number of examples bring remarkably similar results.

You can gain a little more insight on the transition from 2D web to 3D virtual reality from the user's perspective from this thesis publication.

There are countless finely nuanced technical approaches other than those touched on above, and as we know, wide-spread adoption is sometimes just a matter of luck: a spectacular example, the right moment, a sympathetic and key information broker, a surge of interest in some related field. Nevertheless, if there is one thing we can take away from the last few years' experience with frameworks, it is that less is generally more.

The Future: OpenXR And The 3D Portability API

Producing content for use across multiple environments is a pain. Happily, for Web3D, VR and AR, there is some hope of the various platform APIs being homogenised and unified under a common 'OpenXR' layer, and for these to form the basis of future javascript libraries allowing cross-platform development.

OpenXR will offer two APIs, one application-facing, the other device-facing, acting (depending on viewpoint) as an integration or defragmentation layer for VR and Web3D.

OpenXR will be augmented by a so-called 3D Portability API intended to harmonise various hardware interfaces, which, together with initiatives around open source compilers and translators, will (it is hoped) lead to an updated 'WebGL Next'.

Why all the blether? WebGL 2.0 has already brought desktop and mobile platforms 3D capabilities much closer together. WebGL Next is expected to continue this trend.

Keywords

online music learning, online music lessons, distance music learning, distance music lessons, remote music lessons, remote music learning, p2p music lessons, p2p music learning, music visualisation, music visualization, musical instrument models, interactive music instrument models, music theory tools, musical theory, p2p music interworking, p2p musical interworking, peer-to-peer musical interworking, comparative musicology, ethnomusicology, world music, international music, folk music, traditional music, WebGL, Web3D, WebVR, WebAR, Virtual Reality, Augmented or Mixed Reality, Artificial Intelligence, Machine Learning, Scalable Vector Graphics, SVG, 3D Cascading Style Sheets, CSS3D, X3DOM, XML3D

Comments, questions and (especially) critique welcome.